HPCC Systems 6.0.x Feature Highlights Part 1

HPCC Systems 6.0.x is a major release containing a number of wide-ranging new features and enhancements, some of which change the underlying architecture of the system. Our early adopters have been helping us to locate issues which tend to surface only with wider use. If you’re one of those users, we thank you for your feedback and are pleased to announce that the latest 6.0.x release is now available on our website. For a full list of changes between 6.0.0 and the latest release, see the changelogs provided.

This is the first of 2 blogs outlining the feature highlights of the HPCC Systems® 6.0.x release. You can find the second blog here and more information about the multicore capabilities of HPCC Systems 6.0.x series here.

Dali replacement for workunit storage – https://hpccsystems.atlassian.net/browse/HPCC-12251

The new Apache Cassandra™ data store facility provides an alternative way of storing workunit information, which has been one of Dali’s functions since HPCC Systems was created 11 years ago. There are a number of reasons why we need to make changes in this area, the most central being that Dali is a single point of failure which does not support redundancy, hot swap or failover. While Dali has held its own admirably over the years (and continues to do so), we must think about the future and plan for the ever-increasing storage capacity needed to meet the demands placed on Dali, which may eventually affect its reliability and maintainability.

There are also scalability issues to consider: Dali’s data has to fit in memory on one machine, so its performance cannot be improved by adding more nodes.

HPCC Systems 6.0.0 is the first release to offer the option of using a Cassandra database in place of Dali for storing workunit information. This option is configured via the environment.xml file and, when in use, is transparent to users. We have also provided a tool (wutool) for migrating existing workunits from a Dali store to a Cassandra one or vice versa. We expect that, from a capacity point of view, there will be no need to archive workunits once you start using the Cassandra store, so in HPCC Systems 6.0.0 our workunit archive storage component (Sasha) will not attempt to archive them.

All other information, such as logical files, queues, locks, environments and group details, remains in Dali. You can find out how to install and configure your Cassandra data store and use wutool in the relevant section of the HPCC Systems Administrator’s Guide.

Virtual-slave Thor – https://hpccsystems.atlassian.net/browse/HPCC-8710

This new feature provides the ability to configure Thor to run multiple virtual slaves (aka channels).

In previous versions, Thor could run multiple thorslaves per node using the slavesPerNode setting, but the slaves could not share RAM or any other resources, and each was forced to have a predefined slice of the total memory. It also meant that communication between the master and the slaves had to be done by broadcasting the same information to each slave, and the slaves were completely unaware of one another.

The new implementation means that each thorslave process can run multiple virtual slaves within the same process, allowing them to share a number of useful resources:

- All virtual slaves share a common memory pool

This means that instead of every slave being tied to a limited slice, some slaves can use more memory than others where total resources permit. As a result, there will be less overall spilling, i.e. Thor will utilize a greater percentage of total memory at each phase. There is also greater control over what spills and when spilling can take place.

- Collective caching

Thor slaves cache various kinds of data, index key pages being one example. In the new virtual slave setup, the cache is shared, meaning it can be larger and achieve more cache hits.

- Activity implementations can take advantage of the single process, multi-channel view

One of the best examples of this is probably the Lookup Join and Smart Join, which build a table of the global RHS and broadcast it to all other nodes. The old process-per-slave approach meant that there were N copies of the RHS table on each physical node, limiting the size of the RHS that could be used in a Lookup Join to a fraction of what it could have been. In the new virtual slave implementation, only one copy of the RHS exists on each node, meaning a Lookup Join can actually utilize all of memory. It also means that the RHS is no longer broadcast N times to the same physical node, saving on network traffic and time. The upshot is that Lookup and Smart Joins will be faster and scale to greater sizes. Many other activities will also be able to take direct advantage of the single shared process; for example, PARSE can share the same parsed AST between virtual slaves.

- More efficient communication

In the initial version, the virtual slaves still communicate to a large extent via their own MP port/listener, which communicates over the loopback device (as they did before). However, future versions will involve more direct slave-to-slave communication, which will not only avoid using the network interface and MP, but also involve far less of the costly serialization and deserialization of rows.

- Improved CPU utilization

In the process-per-slave world, highly parallel tasks like sort tend to use all available cores. Where there are multiple slaves per node, this results in inefficient over-commitment of the available CPU cores. In the new virtual slave scheme, all slaves can use a common pool and collaborate to increase utilization and avoid contention.

- Simplified startup and configuration

The old process-per-slave model required the launch and coordination of N processes per node, each registering with the master, to be scripted. On the whole, Thor was unaware that the slaves co-existed on the same node, which made the start/stop and cleanup scripts overly complicated. The new setup means the Thor cluster scripts can be streamlined, since there are fewer processes to be monitored and maintained.

- Looking into the future

Future enhancements will bring better management and co-ordination of CPU cores.
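As a rough illustration of why a common memory pool and a single RHS copy matter, the following Python sketch models one physical node with four slaves. It is a deliberately simplified model, not Thor’s memory manager: it assumes that with slavesPerNode each slave gets an equal, fixed slice of node memory, that a shared pool can serve any virtual slave up to the node total, and that a lookup-style join must hold the whole RHS in memory. The function names and numbers are hypothetical.

```python
"""Toy model of one Thor node: fixed per-slave memory slices vs. a shared pool.

Illustrative sketch only (hypothetical names and numbers); this is not how
Thor's memory manager actually works.
"""
from __future__ import annotations


def spill_fixed_slices(demands_mb: list[int], node_memory_mb: int) -> int:
    """MB spilled when each slave process owns an equal, fixed slice of memory."""
    slice_mb = node_memory_mb // len(demands_mb)
    return sum(max(0, demand - slice_mb) for demand in demands_mb)


def spill_shared_pool(demands_mb: list[int], node_memory_mb: int) -> int:
    """MB spilled when all virtual slaves draw from one common memory pool."""
    return max(0, sum(demands_mb) - node_memory_mb)


def max_lookup_rhs_mb(node_memory_mb: int, slaves_per_node: int, shared: bool) -> float:
    """Largest RHS that fits in memory on one node for a lookup-style join.

    Process per slave: each slave holds its own full RHS copy within its slice.
    Virtual slaves: a single RHS copy is shared by all channels on the node.
    """
    if shared:
        return float(node_memory_mb)
    return node_memory_mb / slaves_per_node


if __name__ == "__main__":
    node_mb = 16_000
    demands = [2_000, 9_000, 3_000, 1_000]  # uneven per-slave demand in one phase
    print(spill_fixed_slices(demands, node_mb))         # 5000
    print(spill_shared_pool(demands, node_mb))          # 0
    print(max_lookup_rhs_mb(node_mb, 4, shared=False))  # 4000.0
    print(max_lookup_rhs_mb(node_mb, 4, shared=True))   # 16000.0
```

With uneven demand, the fixed slices force the busiest slave to spill even though the node as a whole has spare memory, and the largest usable RHS shrinks by a factor equal to the number of slaves per node.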

There’s a separate blog about the new multicore features in HPCC Systems 6.0.0.

New Quantile Activity – https://hpccsystems.atlassian.net/browse/HPCC-12267

This new activity is a technical preview in HPCC Systems 6.0.0. It can be used to find the records that split a dataset into a given number of equal-sized blocks. Two common uses are to find the median of a set of data (split into 2) or percentiles (split into 100). It can also be used to split a dataset for distribution across the nodes in a system. One hope is that the classes used to implement QUANTILE in Thor can also be used to improve the performance of the global sort operation. Gavin Halliday has written a blog series on this feature:

- What does it take to add a new activity?

- Quantile 2 – Test cases

- Quantile 3 – The parser

- Quantile 4 – The engine interface
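The idea behind QUANTILE can be illustrated on a single machine with a short Python sketch: sort the values, then pick the records at the positions that divide them into n roughly equal blocks (n = 2 gives the median, n = 100 gives percentiles). The same boundaries can serve as split points when distributing records across nodes. The nearest-rank boundary rule and the function names are assumptions made for this sketch, not the definition used by the ECL activity, which works on distributed data.

```python
"""Single-machine sketch of quantile boundaries and range partitioning.

Illustrative only: Thor's QUANTILE activity works on distributed data, and its
exact boundary and tie-handling rules may differ from the choices made here.
"""
from __future__ import annotations

from bisect import bisect_left


def quantile_boundaries(values: list[float], n: int) -> list[float]:
    """Return the n - 1 values that split `values` into n roughly equal blocks.

    Uses the nearest-rank rule: boundary i is the sorted value at position
    ceil(i * count / n) - 1, i.e. the last value of block i.
    """
    if n < 2:
        raise ValueError("n must be at least 2")
    ordered = sorted(values)
    count = len(ordered)
    if count == 0:
        return []
    return [ordered[(i * count + n - 1) // n - 1] for i in range(1, n)]


def target_block(value: float, boundaries: list[float]) -> int:
    """Index of the block (e.g. target node) a value falls into."""
    return bisect_left(boundaries, value)


if __name__ == "__main__":
    print(quantile_boundaries([7, 1, 9, 3, 5], 2))      # [5]  (the median)
    cuts = quantile_boundaries(list(range(1, 101)), 4)
    print(cuts)                                         # [25, 50, 75]
    print([target_block(v, cuts) for v in (1, 25, 26, 100)])  # [0, 0, 1, 3]
```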

Security enhancements in the compiler

Versions of HPCC Systems prior to 6.0.0 have always allowed some control over which operations were permitted in ECL code. This was done, primarily, as a way to ensure that operations like PIPE or Embedded C++ could not be used to circumvent access controls on files by reading them directly from the operating system. In HPCC Systems 6.0.0, we have implemented two features to provide more flexibility over the control of operations.

- We now use the FOLD attribute to limit which SERVICE functions are called at compile time.

https://hpccsystems.atlassian.net/browse/HPCC-13173

- You can configure the access rights (which control the ability to use PIPE, embed C++, or declare a SERVICE as foldable) to depend on whether the code is signed. This means that we can provide signed code in the ECL Standard Library that makes use of these features, without allowing anyone to call anything they like.

https://hpccsystems.atlassian.net/browse/HPCC-14185

Code signing uses the standard gpg package and works much like email signing, which proves that a message comes from who it claims to be from and has not been tampered with. A signed file has an attached signature containing a cryptographic hash of the file contents, signed with the signer’s private key. Anyone in possession of the signer’s public key can then verify that the signature is valid and that the content is unchanged.

So, in the ECL scenario, we will sign the SERVICE definitions provided by the ECL standard plugins and include the public key in the HPCC platform installation. Code that uses signed service definitions will continue to work as before, but code that tries to call arbitrary library functions using user-supplied SERVICE definitions will give compile errors.

Operations/system administrators can install additional keys on the eclserver machine, so if you want to use your own service definitions, they can be signed using a key that has been installed in this way. Using this method, a trusted person can sign code to indicate that it is ok for untrusted people to use, without allowing the untrusted people to execute arbitrary code.
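The sign-and-verify principle can be illustrated with textbook RSA in Python. This is a toy sketch only, not what gpg does internally: the tiny hard-coded key (built from the primes 61 and 53) is hopelessly insecure, there is no padding, and the hash is reduced modulo a small number. It simply shows that a signature made over the content hash with the private key can be checked by anyone holding only the public key.

```python
"""Toy sign-and-verify example using textbook RSA.

INSECURE and illustrative only: tiny hard-coded key, no padding, and the hash
is reduced modulo a small number. Real code signing uses gpg and proper keys.
"""
import hashlib

# Toy key pair built from the primes 61 and 53 (hypothetical, far too small).
P, Q = 61, 53
N = P * Q                          # public modulus: 3233
E = 17                             # public exponent
D = pow(E, -1, (P - 1) * (Q - 1))  # private exponent: 2753 (needs Python 3.8+)


def content_hash(content: bytes) -> int:
    """SHA-256 of the content, reduced modulo N so the toy key can sign it."""
    return int.from_bytes(hashlib.sha256(content).digest(), "big") % N


def sign(content: bytes) -> int:
    """Signer side: apply the private exponent D to the content hash."""
    return pow(content_hash(content), D, N)


def verify(content: bytes, signature: int) -> bool:
    """Verifier side: only the public key (N, E) is needed."""
    return pow(signature, E, N) == content_hash(content)


if __name__ == "__main__":
    definition = b"example SERVICE definition"
    signature = sign(definition)
    print(verify(definition, signature))             # True
    print(verify(definition, (signature + 1) % N))   # False
    print(verify(definition + b" (edited)", signature))
```

Editing the content changes its hash, so verification of the edited definition normally fails; because this toy reduces the hash modulo 3233, an accidental match is possible, which real signatures avoid by signing the full hash with a large key.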

TRACE Activity – https://hpccsystems.atlassian.net/browse/HPCC-12872

A new TRACE activity is now available which provides a way of putting ‘tracepoints’ into ECL code that will cause some or all of the data going through that part of the graph to be saved into the log file.

Tracing is not output by default even if TRACE statements are present. The workunit debug value traceEnabled must be set or the default platform settings changed to always output tracing.

In Roxie, you can also request tracing on a deployed query by specifying traceEnabled=1 in the query XML. This means you can leave TRACE statements in the ECL without any detectable overhead until tracing is enabled. Tracing is output to the log file in the form:

TRACE: value...

The syntax to use is:

myds := TRACE(ds [, traceOptions]);

The available options are:

- Zero or more expressions, which act as a filter. Only rows matching the filter will be included in the tracing.

- KEEP(n) indicating how many rows will be traced.

- SKIP(n) indicating that n rows will be skipped before tracing starts.

- SAMPLE(n) indicating that only every nth row is traced.

- NAMED(string) providing the name for the rows in the tracing.

It is also possible to override the default value for KEEP at a global, per-workunit, or per-query level.

#option ('traceEnabled', 1); // trace statements will be enabled

#option ('traceLimit', 100); // overrides the default keep value (10) for activities that don't specify it

Note: You can use a stored boolean as the filter expression for a trace activity. This can allow you to turn individual trace activities on and off.
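The interplay of the filter with the KEEP, SKIP and SAMPLE options can be modeled with a short Python simulation. It is a sketch under stated assumptions, not the platform’s implementation: the order in which the options are applied (filter, then SKIP, then SAMPLE, then KEEP), tracing the first row of each SAMPLE interval, and the function name traced_rows are all assumptions; the default of 10 rows comes from the traceLimit comment above. A stored boolean used as the filter corresponds to a predicate that ignores the row and returns that flag.

```python
"""Simulation of which rows a TRACE-style tracepoint would write to the log.

Illustrative only. In ECL, TRACE passes every row through unchanged; this
models just the selection of logged rows. The option order used here
(filter, SKIP, SAMPLE, KEEP) is an assumption, not documented behavior.
"""
from __future__ import annotations

from itertools import islice
from typing import Callable, Iterable, Iterator, TypeVar

Row = TypeVar("Row")

DEFAULT_KEEP = 10  # default keep value quoted in the traceLimit comment


def traced_rows(
    rows: Iterable[Row],
    predicate: Callable[[Row], bool] = lambda row: True,
    skip: int = 0,
    sample: int = 1,
    keep: int = DEFAULT_KEEP,
    enabled: bool = False,
) -> Iterator[Row]:
    """Yield the rows that would be traced (nothing unless tracing is enabled)."""
    if not enabled:                                      # traceEnabled not set
        return iter(())
    matching = (row for row in rows if predicate(row))   # filter expressions
    after_skip = islice(matching, skip, None)            # SKIP(n)
    sampled = islice(after_skip, 0, None, sample)        # SAMPLE(n): every nth row
    return islice(sampled, keep)                         # KEEP(n)


if __name__ == "__main__":
    ages = range(1, 101)
    print(list(traced_rows(ages, lambda age: age > 30,
                           skip=2, sample=5, keep=3, enabled=True)))  # [33, 38, 43]
    print(len(list(traced_rows(ages, enabled=True))))  # 10
    print(list(traced_rows(ages)))                     # []
```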

DFUPLUS restore super files feature – https://hpccsystems.atlassian.net/browse/HPCC-14111

DFUPLUS already allowed users to restore all the files from a previous session; we have extended this feature so that superfiles can also be exported and restored.

Init System improvements

Building on the improvements made in 5.4.0, here are a few more features we have added to make building and using HPCC Systems easier from an operational standpoint:

- If you want to use a different JVM for the Java plugin, you can now specify it in the environment.conf file. Simply assign the absolute path of libjvm.so to the JNI_PATH variable. When the component starts, the specified JVM is automatically recognized and linked.

https://hpccsystems.atlassian.net/browse/HPCC-12943

https://hpccsystems.atlassian.net/browse/HPCC-14245

- Administrators have always been able to save attributes for a Roxie or Thor component in environment.xml. However, on startup, the system would also try to start components that were not listed in the topology, which could cause confusion. Now, a component will only start if it is also declared in the topology section of environment.xml.

https://hpccsystems.atlassian.net/browse/HPCC-13844

- We have added checks to detect configgen failures and produce improved error messages with better information about the problem found. We are also working on further improvements to distinguish between errors and warnings. This will mean that valid scenarios that users may want to experiment with for testing purposes will produce a warning but will no longer stop a component from starting, as they currently do.

https://hpccsystems.atlassian.net/browse/HPCC-13980
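The topology rule for starting components can be sketched with Python’s standard XML parser. The environment.xml fragment below is a hypothetical, heavily simplified example (real files contain many more elements and attributes), and components_to_start is an illustration of the rule, not the logic used by the HPCC Systems init scripts.

```python
"""Sketch: only start Thor/Roxie components that are declared in the topology.

The XML below is a hypothetical, heavily simplified environment.xml fragment;
this is not the logic of the real HPCC Systems init scripts.
"""
from __future__ import annotations

import xml.etree.ElementTree as ET

ENVIRONMENT_XML = """
<Environment>
  <Software>
    <ThorCluster name="mythor"/>
    <ThorCluster name="oldthor"/>
    <RoxieCluster name="myroxie"/>
    <Topology>
      <Cluster name="thor">
        <ThorCluster process="mythor"/>
      </Cluster>
      <Cluster name="roxie">
        <RoxieCluster process="myroxie"/>
      </Cluster>
    </Topology>
  </Software>
</Environment>
"""

COMPONENT_TAGS = ("ThorCluster", "RoxieCluster")


def components_to_start(xml_text: str) -> list[str]:
    """Return configured components that are also referenced in the Topology."""
    software = ET.fromstring(xml_text).find("Software")
    if software is None:
        return []
    # Components with saved attributes (direct children of <Software>).
    configured = [
        element.get("name")
        for tag in COMPONENT_TAGS
        for element in software.findall(tag)
    ]
    # Components referenced by a cluster in the topology section.
    in_topology = {
        element.get("process")
        for tag in COMPONENT_TAGS
        for element in software.iterfind(f"Topology/Cluster/{tag}")
    }
    return [name for name in configured if name in in_topology]


if __name__ == "__main__":
    print(components_to_start(ENVIRONMENT_XML))  # ['mythor', 'myroxie']
```

Here "oldthor" still has saved attributes but is not referenced in the topology, so under the new rule it would not be started.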

New ESP service methods

Two new ESP service methods are now available in HPCC Systems 6.0.0:

- WSESPControl.SetLogging

Previously, to change the logging level of an ESP, the logging level had to be changed in environment.xml and the ESP had to be restarted. Using this feature, an admin user can temporarily change the logging level while the ESP is running. The new logging level remains in use only for as long as the ESP keeps running the same session. If the ESP is restarted at any time, the logging level reverts to the original level defined in environment.xml.

https://hpccsystems.atlassian.net/browse/HPCC-12914

- WsWorkunit.WUGraphQuery

Previously, getting a list of graphs required a call to WsWorkunit.WUInfo, which uses a lot of ESP time and resources. We have implemented this new method to make it easier for you to get the list of graphs and to specify what kind of graphs you want listed.

https://hpccsystems.atlassian.net/browse/HPCC-13939

DynamicESDL support for writing web-services in Java – https://hpccsystems.atlassian.net/browse/HPCC-14255

This new feature is a technical preview for HPCC Systems 6.0.0. You can now write ESP web-services in Java using Dynamic ESDL. Creating a Java service is made simple because Java base classes are generated once the ESDL interface has been defined. A DynamicESDL web-service can now contain a mixture of operations written in Java and operations written in ECL (running on Roxie).

Once the HPCC Systems platform is installed, configure an instance of DynamicESDL to run on ESP using configmgr. The steps are documented in this manual: Learning ECL

For this technical preview, the documentation (including the ReadMe.txt) assumes that you are using port 8088; we recommend that you do so, to make the walkthrough example easier to follow. The DynamicESDL example provided includes a walkthrough of creating a Java service and is located here: /opt/HPCCSystems/examples/EsdlExample.

Additional Nagios monitoring feature – https://hpccsystems.atlassian.net/browse/HPCC-14188

HPCC Systems 6.0.0 includes an additional monitoring feature: if you have Nagios set up on your system, it will now carry out thormaster process checks and report the status back, which you can view via the monitoring interface now built into ECL Watch.

100% Javascript Graph Viewer – https://hpccsystems.atlassian.net/browse/HPCC-12805

A technical preview of this was launched in HPCC Systems 5.2.0 in response to the phasing out of NPAPI support, which would eventually leave most modern browsers unable to display graphs in ECL Watch. In HPCC Systems 6.0.0, the new 100% Javascript Graph Viewer will be the default.

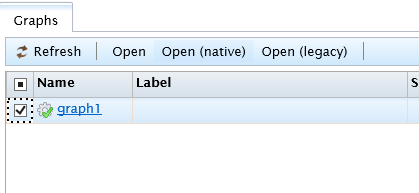

However, the old default graph page is still available from the Graphs tab in ECL Watch using the Open (legacy) option, which uses the ActiveX control if available and otherwise the JS graph control. You can also use the new graph page with the ActiveX graph control, if it’s available, using the Open (native) option.

One great advantage of the new Javascript Graph Viewer is that it does not require an additional installation process because it runs automatically within your browser. Another is that it opens up opportunities for us to improve the quality of information we can supply about your job via the graph in the future, such as where time was spent, where memory usage was the largest, significant skews etc.

Notes:

- Download the latest HPCC Systems release and ECL IDE/Client Tools.

- Read the supporting documentation.

- Take a test drive with the HPCC Systems 6.0.x VM.

- Tell us what you think. Post on our Developer Forum.

- If you encounter problems, let us know using our Community Issue Tracker.

- Read a blog about the multicore capabilities of the HPCC Systems 6.0.x series.