Battery Powered Big Data

Recently, the HPCC platform team held one of our offsite conferences, which usually involves us disappearing into the wilderness somewhere for a week. So, leaving the day to day pressures behind, we all decamped to a remote cottage in the frozen North of England to discuss how to improve HPCC Systems as well as future development plans. Something unusual often comes out of these gatherings and this was no exception.

This year, as the Raspberry Pi3 had just been announced the week before the conference, I decided it might be fun to give one to everyone on the team to see what interesting uses they might think of for them. Predictably, the first reaction was ‘Can it run HPCC?’. So over the course of the week, after the main discussions for the day were done, we spent a few hours finding out.

Single node HPCC Systems 5.6.0

Initially progress was very fast. We elected to use the 5.6.0 branch, as the latest stable release (available on the HPCC Systems website download page soon).

Once we had installed all the prerequisites listed on the HPCC Systems Developer Wiki and set the build flags to ignore those that were either unavailable (for example, TBB) or not relevant (for example, NUMA), the system compiled cleanly at the first attempt.

Starting the system (single node only at this point) revealed the first challenge. Roxie, by default, tries to allocate a 1GB memory block for its heap, and since the Pi3 only has 1GB of RAM this failed. However, all other components started OK.

Trying to run a query revealed the second challenge. eclccserver could not read resources out of generated dlls (and crashed while trying). A quick analysis of the stack trace showed that the culprit was the binutils library. We use this library to try to make things like pulling data out of dlls platform independent, but in practice, this has proved to be one of the least portable parts of the system. Fortunately, we had previously developed alternative strategies to be used on platforms where binutils was either unavailable or unreliable, so a quick recompile later with -DUSE_BINUTILS=0 and we were ready to try again.

I say ‘quick recompile’ but a full recompile on the Pi3 took over an hour in release mode, although it was a little faster in debug mode.

So now we were getting a little further. There were no crashes, but we were still unable to extract workunit resources from the dll, with an error report claiming that the dll was not in elf64 format. As indeed it was not.

The Pi3 has a 64-bit processor but, as yet, all the software on it, including the Debian Jessie Raspbian distro we were using, is still 32-bit. The code in the _USE_BINUTILS=0 codepath was assuming 64-bit, but it was only a few minutes’ work to implement the elf32 support (literally, copying the elf64 support and changing all instances of 64 to 32 was sufficient). We tested again, and we had success! We could run our first query.
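The elf32/elf64 distinction is recorded in the file itself: the fifth byte of every ELF header (EI_CLASS) is 1 for 32-bit and 2 for 64-bit objects. The following is a minimal Python sketch of that check, written for illustration only. It is not the HPCC Systems resource-extraction code, and the function names are hypothetical.

```python
ELF_MAGIC = b"\x7fELF"       # first four bytes of every ELF file
EI_CLASS_OFFSET = 4          # index of the class byte within e_ident
ELF_CLASSES = {1: "elf32", 2: "elf64"}


def elf_class(header: bytes) -> str:
    """Return 'elf32' or 'elf64' given the first bytes of a file."""
    if len(header) <= EI_CLASS_OFFSET or header[:4] != ELF_MAGIC:
        raise ValueError("not an ELF file")
    try:
        return ELF_CLASSES[header[EI_CLASS_OFFSET]]
    except KeyError:
        raise ValueError("unknown ELF class") from None


def elf_class_of_file(path: str) -> str:
    """Read just enough of the file at `path` to classify it."""
    with open(path, "rb") as f:
        return elf_class(f.read(16))
```

Calling `elf_class_of_file` on a shared object built on 32-bit Raspbian would be expected to return `"elf32"`, which is the case the 64-bit-only codepath could not handle.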

Multi-node HPCC Systems 6.0.0

The next challenge was to get a multi-node system running, but before we did, we decided to switch to the 6.0.0 candidate branch for development. It would be easier to apply any fixes that might be needed to this branch, since the 6.0.0 branch is still under active development, and it would also be a useful testing exercise that might flush out any new issues in 6.0.0. We did indeed uncover some additional dependencies that we now handle more cleanly in 6.0.0.

So, while we experienced a few bumps in the road that we had not seen in 5.6.0, before long we had 6.0.0 running successfully and simple queries running on a 2-node thor.

At this point we hit the first of the bigger challenges. We were using the standard beginner ‘NOOBS’ Raspberry Pi distribution on 8GB SD cards, but by the time we had installed HPCC Systems and all its prerequisites there was not a lot of disk space left.

So it was time to install a more minimal OS image and ‘Ubuntu server minimal’ seemed to fit the bill. However, it came without a configuration (or configuration tools) for the Pi3’s built-in Wi-Fi, so we spent a long evening trying to get that working, eventually succeeding.

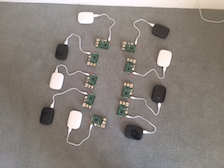

Now we had a massive 4.5GB of free space per node and we were ready for the final challenge. We wanted to do a distributed sort based on the “terabyte sort”. However, with only 8 thor slave nodes and 4GB of free disk space per node, we would have to scale back a little. We settled on 10GB as being large enough that all nodes would have to spill, while small enough to fit the input data, output data and any spill files into our available disk space.
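The sizing argument can be checked with a little arithmetic. The sketch below is a rough Python estimate, not a measurement. It assumes the data is spread evenly over the nodes and that each node spills at most its own share of the input; both are simplifying assumptions, and the function name is hypothetical.

```python
def per_node_disk_gb(total_gb: float, nodes: int, spill_fraction: float = 1.0) -> float:
    """Estimate disk needed per node: input share + output share + spill.

    spill_fraction is the assumed spill size relative to a node's input
    share (1.0 means the whole share is spilled once).
    """
    share = total_gb / nodes
    return share * (2 + spill_fraction)


NODES = 8          # thor slave nodes in the cluster
FREE_GB = 4.0      # free disk per node
RAM_GB = 1.0       # total RAM on a Pi3

share = 10 / NODES                       # 1.25 GB of input per node
needed = per_node_disk_gb(10, NODES)     # 3.75 GB per node

print(f"input share per node: {share} GB (RAM is {RAM_GB} GB, so it must spill)")
print(f"estimated disk per node: {needed} GB of {FREE_GB} GB free")
```

Under these assumptions each node holds 1.25GB of input, more than its 1GB of RAM, and needs about 3.75GB of disk in total, which leaves very little headroom within 4GB.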

10GB sort

Just as a sanity check, we started off with 2GB, which finished successfully and very quickly (too quickly, we later realized). We adjusted the constants for 10GB, kicked off the job, and waited. And waited. And waited…

An hour later it was still going, so we took another look at the 2GB “sanity check” job to see how it had managed to complete so quickly. In the interests of saving disk space, we had not actually output the sorted result; we had just fed it into a count operation. The code generator had kindly decided that there was no point sorting something that was only going to be counted, or reading something from the disk when the count was available in the file’s metadata. So it had optimized the whole job into a single “fetch record count from DALI” operation. Very clever, but not quite what we had wanted on this occasion!
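To make the effect concrete, here is a toy Python sketch of that kind of rewrite. It is purely illustrative: the class names are hypothetical and it bears no relation to how the HPCC Systems code generator is actually implemented. It only shows why count(sort(file)) can collapse to a metadata lookup.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DiskRead:
    name: str
    record_count: int   # assumed to be available from file metadata


@dataclass(frozen=True)
class Sort:
    source: object


@dataclass(frozen=True)
class Count:
    source: object


@dataclass(frozen=True)
class MetadataCount:
    name: str
    value: int


def optimize(node):
    """Simplify Count(...) trees; leave everything else untouched."""
    if isinstance(node, Count):
        src = node.source
        # Sorting never changes how many records there are, so skip it.
        while isinstance(src, Sort):
            src = src.source
        # Counting a plain file needs no read: the metadata has the answer.
        if isinstance(src, DiskRead):
            return MetadataCount(src.name, src.record_count)
        return Count(src)
    return node


plan = Count(Sort(DiskRead("sanity_check_input", 20_000_000)))
print(optimize(plan))
```

In this toy model, a plan that outputs the sorted data (a bare `Sort(...)` at the top) is returned unchanged, so the sort really has to run.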

It was also at this point that we noticed we had forgotten to turn off disk replication. This meant that the system was spending a lot of CPU power and (more importantly) a lot of network bandwidth that we didn’t have, and (fatally) a lot of disk space we definitely didn’t have, making a backup copy of all data on the adjacent node. So the job was killed, the data cleaned up, the system reconfigured, the batteries swapped around, and the job restarted. It was getting late by this point, so we left it running and retired for the night.

In the morning, everyone’s first question was ‘Did it finish?’ and the answer was yes, it did, in just over an hour.

Sorting 10GB in just over an hour isn’t going to break any world speed records. However, the main limiting factor was not the CPU speed of the Raspberry Pi3, but the very limited network bandwidth available. The whole cluster was connected via Wi-Fi to a miniature router designed to be a range-extender.
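To put a rough number on that, the short Python calculation below converts "10GB in about an hour" into an effective sort rate. It assumes decimal units (1GB = 1000MB) and a run time of exactly 3600 seconds; since the job took slightly longer, the figures are upper bounds. They describe sorted data volume end to end, not measured network traffic.

```python
def effective_rate(total_gb: float, seconds: float, nodes: int):
    """Return (aggregate MB/s, per-node MB/s, aggregate Mbit/s)."""
    total_mb = total_gb * 1000           # decimal gigabytes to megabytes
    aggregate_mb_s = total_mb / seconds
    return aggregate_mb_s, aggregate_mb_s / nodes, aggregate_mb_s * 8


aggregate, per_node, mbit = effective_rate(10, 3600, 8)
print(f"aggregate: {aggregate:.2f} MB/s ({mbit:.1f} Mbit/s)")
print(f"per node:  {per_node:.2f} MB/s")
```

That works out to a little under 3MB/s across the whole cluster, or roughly a third of a megabyte per second per node.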

But it worked and the whole sort was done under battery power from a collection of USB power bricks!

What we learnt from this experience…

Firstly, it was a pleasant surprise to see how easily the port to the Raspberry Pi3 went. This isn’t the first time we have run HPCC Systems on an ARM platform. We had it running on a Chromebook a couple of years back. But it was great to see everything still worked so smoothly.

Secondly, the breakdown of where the time went showed that the limiting factor for this job was not CPU power but network bandwidth. When a job is bound by I/O rather than by the processor, a slower, lower-power CPU costs little in elapsed time. This supports my long-held view that at some point in the next few years, the power budget of the machines we use in our datacenter is going to be more significant than the purchase cost or floor space requirements. If I’m right, we may well be using ARM-based hardware on full-sized clusters before too long.

Thirdly, it was amusing to reflect that these £30 pocket-sized computers were actually superior in spec in almost every way to the original ‘red boxes’ that we used to bring up the first predecessors of HPCC Systems 15 years ago.

And last but not least, the whole team enjoyed this experiment. It was a lot of fun!