Getting Queries and Data to the Cloud

Krishna Turlapathi has been part of LexisNexis Risk Solutions since 2009. He is a Director of Software Engineering on the HPCC Systems Platform team and is currently working on optimizing Roxie. Krishna’s focus is improving Roxie in the cloud environment and helping internal and external customers set up and move ECL queries to the cloud. In this blog post, Krishna walks you through the steps to move your queries and data to the cloud.

*****************************************************************

Several design decisions in HPCC Systems make operating today’s HPCC Systems platform in the cloud a little complex. When it comes to deploying queries and data to HPCC Systems in the cloud, however, usage and operation are designed to be similar to those of a bare metal system. (Refer to Richard Chapman’s blog, “HPCC Systems and the Path to the Cloud”.)

The process of migrating or transitioning to the cloud-based HPCC Systems platform should be seamless. Once you have set up HPCC Systems in the cloud, the next task is to get your data and ECL queries into the cloud environment.

Getting Queries to the Cloud Environment

There are two traditional ways of getting queries onto HPCC Systems:

- Compiling queries from ECL code

- Copying existing queries from another cluster

Both approaches still apply in the cloud environment.

First, let’s look at copying existing queries onto HPCC Systems in the cloud, either from another cluster in the cloud or from a bare metal system.

To get your queries to the cloud, you can copy either individual queries or an entire query set. If you are copying existing queries from a bare metal system or another cluster, make sure both environments are running the same or a compatible version of the HPCC Systems platform; otherwise, you will have to recompile your queries in the cloud.

To copy individual queries use the command:

ecl queries copy //<sourceIP>:<port>/<queryset>/<query> -s <targetIP> <targetCluster>

To copy your entire query set, use the command:

ecl queries copy-set //<sourceIP>:<port>/<sourceCluster> <targetCluster> -s <targetIP>
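If you have several queries to move but do not want the whole query set, the single-query command can be scripted. The following is a minimal bash sketch, assuming the ecl client tools are installed and on your PATH. The helper name copy_queries is hypothetical, it uses only the `ecl queries copy` syntax shown above, and setting ECL=echo prints the commands instead of running them.

```shell
#!/usr/bin/env bash
# Sketch: copy a list of individual queries using the `ecl queries copy`
# syntax shown above. copy_queries is a hypothetical helper name.
# Set ECL=echo to print each command instead of running it (a dry run).
ECL="${ECL:-ecl}"

# Usage: copy_queries <sourceIP:port> <queryset> <targetIP> <targetCluster> <query>...
copy_queries() {
  if [ "$#" -lt 5 ]; then
    echo "usage: copy_queries <sourceIP:port> <queryset> <targetIP> <targetCluster> <query>..." >&2
    return 2
  fi
  local src="$1" queryset="$2" target_ip="$3" target_cluster="$4"
  shift 4
  local query failed=0
  for query in "$@"; do
    # Same argument order as the single-query command in the text.
    if ! "$ECL" queries copy "//${src}/${queryset}/${query}" -s "$target_ip" "$target_cluster"; then
      echo "failed to copy ${query}" >&2
      failed=1
    fi
  done
  # Non-zero if any copy failed, so a calling script can stop early.
  return "$failed"
}
```

For example, copy_queries 10.0.0.5:8010 roxie 20.1.2.3 roxie queryA queryB (illustrative values only) issues one `ecl queries copy` per query and returns a non-zero status if any of them fails.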

To compile and publish queries from your code repository or your local ECL code, you can use the ECL IDE, the command line, or third-party tools such as Microsoft VS Code. From the command line, use:

ecl publish <target> <file> --name=<queryname>

Using Package Files to Get Data onto the Cloud

Once you have your queries in the cloud, you’ll need to get the data they run against. You can either build your data in the cloud environment or copy your existing data using a package file.

To copy existing data from an existing environment to the cloud environment using package files, you have two options: copy your existing package file, or create and add a new one.

To copy an existing package map from a bare metal system or another cluster, use:

ecl packagemap copy //<sourceIP>:<port>/<PackageMapid> <targetCluster> -s <targetIP> --daliip=<daliIP>

If you downloaded the package file to your local machine, or want to use a new package file you created, use the command:

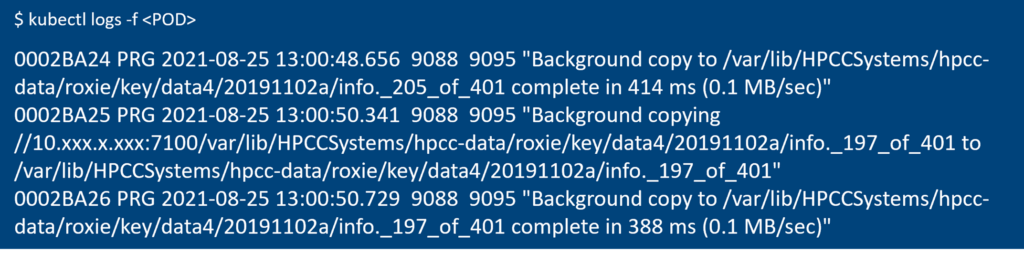

ecl packagemap add <pkgfilename> <targetCluster> -s <targetIP> --daliip=<daliIP>

Once you have deployed your package file, you can check the logs on the pod and see the data copy start.

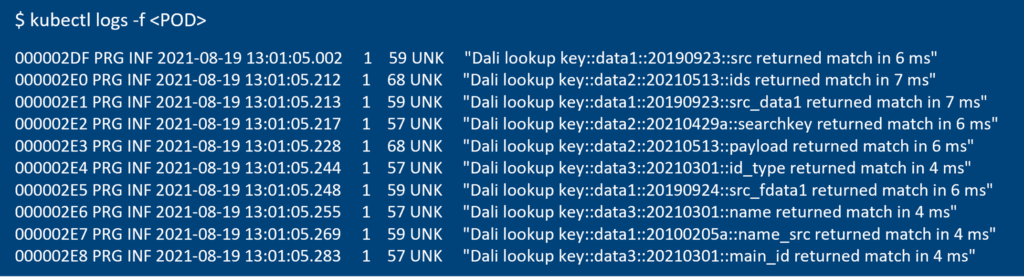
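To follow those logs from a script, the kubectl commands listed in the FAQs can be combined. This is a minimal bash sketch, assuming kubectl is configured for your cluster and that the pod you want to watch has roxie in its name (adjust the pattern to match your deployment). The helper names find_pods and follow_logs are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: find pods by name and follow the log of one of them.
# Assumption: the pod to watch has "roxie" in its name; pass another
# pattern if your deployment names pods differently.
# find_pods and follow_logs are hypothetical helper names.
KUBECTL="${KUBECTL:-kubectl}"

# Print the names of pods matching a grep pattern, without the "pod/" prefix
# that `kubectl get pods -o name` adds.
find_pods() {
  "$KUBECTL" get pods -o name | sed 's|^pod/||' | grep -- "$1"
}

# Stream (-f) the log of the first pod matching the pattern (default: roxie).
follow_logs() {
  local pattern="${1:-roxie}" pod
  pod="$(find_pods "$pattern" | head -n 1)"
  if [ -z "$pod" ]; then
    echo "no pod matching '${pattern}'" >&2
    return 1
  fi
  "$KUBECTL" logs -f "$pod"
}
```

Running follow_logs roxie streams the log of the first matching pod until you interrupt it with Ctrl+C; find_pods roxie on its own lists every matching pod if you need to pick a different one.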

If you are updating a package file, and assuming you are using persistent storage, the logs will show a Dali lookup that verifies the keys in your package file, as well as the copying of any new files.

Once the data and queries are on the cluster, you can run your queries and update the data in the same way you did on your bare metal systems.

FAQs

Q: How do I get the target IP address for the cloud environment?

A: Run kubectl get svc

This lists the external IP addresses and port information for ECL Watch and the other services.
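If you need the address inside a script, for example to fill in <targetIP> for the ecl commands above, kubectl’s JSONPath output can extract it. This minimal bash sketch assumes the ECL Watch service is named eclwatch (check the kubectl get svc output for the actual name), that the first listed port is the one you want, and that your cloud provider reports an IP rather than a hostname. The helper name service_endpoint is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: print "<external-ip>:<port>" for a LoadBalancer service.
# Assumptions: the service is named "eclwatch" unless another name is given,
# its first listed port is the relevant one, and the provider reports an IP.
# service_endpoint is a hypothetical helper name.
KUBECTL="${KUBECTL:-kubectl}"

service_endpoint() {
  local svc="${1:-eclwatch}" ip port
  ip="$("$KUBECTL" get svc "$svc" -o jsonpath='{.status.loadBalancer.ingress[0].ip}')"
  port="$("$KUBECTL" get svc "$svc" -o jsonpath='{.spec.ports[0].port}')"
  if [ -z "$ip" ]; then
    # kubectl get svc shows <pending> until the load balancer is provisioned.
    echo "service '${svc}' has no external IP yet" >&2
    return 1
  fi
  echo "${ip}:${port}"
}
```

The IP part of the output is what you would pass as <targetIP>. On providers that publish a hostname instead of an IP (AWS load balancers, for example), query .status.loadBalancer.ingress[0].hostname instead.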

Q: How do I get the pod names?

A: Run kubectl get pods

Q: How do I check the logs?

A: Run kubectl logs <podname>. You can also add the -f (follow) option to stream the logs as they are written.

Additional Information

Kubernetes commands: Cheat sheet