If you have read our previous blog on deploying the HPCC Systems cloud native platform with Terraform, this blog will be easier to follow. That blog walks through every step needed to get you from zero to hero. If you haven't read it and don't yet know how to deploy the HPCC Systems platform in the cloud, please start there, because the deployment it describes is used here as the example for showing how the logging module works. A link to the blog Getting Started with the HPCC Systems Cloud Native Platform Using Terraform is provided in the Additional Resources section of this blog.

Who is this module for?

Although we will edit a .tf file to use this module, prior knowledge of Terraform is not required. The module is designed for anyone looking for a containerized log processing solution for HPCC Systems.

Before you begin

A little preparation is required to make sure you have everything needed to complete the tutorial in this blog.

You will need:

- A computer running Linux, macOS, or Windows.

- An Azure account with sufficient credits. Go to www.azure.com or talk to your manager if you believe your organization already has one that is available to use.

- A code editor of your choice. This blog uses VS Code.

- A running Azure Kubernetes Service (AKS) cluster.

- If you plan to deploy the Azure Log Analytics Workspace, you will also need the following values from your service principal:

  - Tenant ID: the ID of the tenant of the Azure Active Directory registered app.

  - Client / Application ID: the ID of the Azure Active Directory registered app.

  - Client Secret / Application password: the secret string of the Azure Active Directory registered app.

  - Principal ID: the ID of the service principal. To find it, run the following command, replacing <Service Principal name> with the name of your service principal: `az ad sp list --filter "displayName eq '<Service Principal name>'"`

If the machine you intend to deploy the logging module from is the same one you used to deploy the Azure Kubernetes Service with Terraform, or you know that this module's requirements are already met, skip ahead to Provided Log Solutions. Otherwise, install the following tools on your machine.

Install Terraform

Terraform is an Infrastructure as Code (IaC) tool from HashiCorp. The logging module is written primarily in Terraform.

Please note: Terraform version 1.3.3 or later is required. This link can be used to access the installation instructions.
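If you want Terraform itself to enforce this minimum, a minimal sketch is to pin it with `required_version` in a `terraform` block (add the line to your existing `terraform` block if you already have one). The constraint string simply mirrors the 1.3.3 requirement stated above.

```hcl
# Refuse to run with a Terraform CLI older than the logging module requires.
terraform {
  required_version = ">= 1.3.3"
}
```

With this in place, `terraform init` stops with an unsupported-version error on an older CLI instead of failing later in a less obvious way.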

Install VS Code (Optional)

Visual Studio Code is the code editor used in this tutorial to edit the configuration files. If you do not already have VS Code installed, use this link for setup instructions, or simply keep using your editor of choice.

If you intend to run Terraform from the command line, make sure that you are logged in to Azure and that the active account is the one you intend to use. With the Azure CLI installed, the following command lists the active account:

- `az account list | grep -A 3 '"isDefault": true'`

The following command selects the correct subscription:

- `az account set --subscription <my-subscription-name>`

Provided Log Solutions

The terraform-azurerm-hpcc-logging module provides two containerized log processing solutions: Elastic4HPCCLogs and Azure Log Analytics Workspace. In the following sections, we will look at what they are and how to use them.

Please note: We advise choosing a log solution ahead of time, because switching from Azure Log Analytics Workspace to Elastic4HPCCLogs can cause problems and may force you to redeploy your Kubernetes cluster. Also keep in mind that Azure Log Analytics Workspace can cost more to run than Elastic4HPCCLogs.

Elastic4HPCCLogs

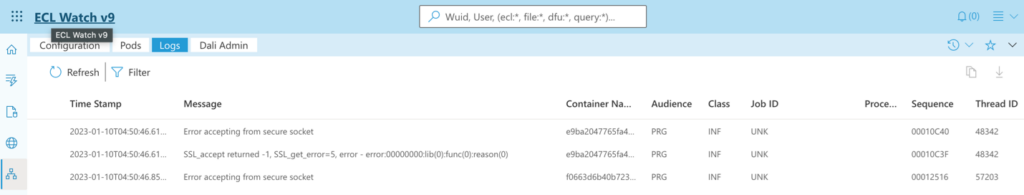

Elastic4HPCCLogs is a lightweight Elastic Stack implementation for HPCC Systems that uses our log standard interface to process the logs of HPCC Systems components. The processed logs can be used for monitoring, benchmarking, auditing, debugging, and more. For Elasticsearch to index the logs of HPCC Systems components, Elastic4HPCCLogs must be deployed in the same namespace as those components. Once the deployment completes successfully, the logs indexed by Elasticsearch are available in ECLWatch (Figure 1), where users can query and filter log data.

Figure 1:

To deploy the terraform-azurerm-hpcc-logging module, we will add it to a .tf file. We can choose to add it to our main.tf or an entirely new file. For the purpose of this example, we will add it to our existing main.tf file, from where the Azure Kubernetes Service module is called, as follows:

```hcl
module "logging" {
  source = "git::https://github.com/hpcc-systems/terraform-azurerm-hpcc-logging.git?ref=v2.0.0"

  elastic4hpcclogs = {
    internet_enabled = true
    name             = "myelastic4hpcclogs"
    timeout          = 900
    wait_for_jobs    = true
    version          = "1.2.10"
  }

  hpcc = {
    namespace = "default"
    version   = "8.12.0-rc1"
  }
}
```

For additional supported arguments and their definitions, please see the module's README.md. It is also worth noting that additional values can be passed to your HPCC Systems platform deployment to tailor the log configuration to your needs.

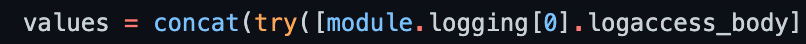

To connect to the HPCC Systems log interface, we need to provide the logaccess configuration to the HPCC Systems platform Helm deployment. A good example is the helm_release.hpcc resource in the main.tf file of the terraform-azurerm-hpcc repository, shown in Figure 2.

Figure 2:
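To make the idea concrete, here is a minimal sketch, not the exact code from terraform-azurerm-hpcc, that keeps the logaccess configuration in a separate Helm values file and passes it to the `helm_release` resource installing the HPCC Systems platform. The file name `logaccess.yaml` and the release name are hypothetical placeholders, and the repository URL assumes the public HPCC Systems Helm chart repository; adjust all of them to match your deployment.

```hcl
# Sketch of an HPCC Systems platform Helm release that also receives the
# logaccess configuration. Names marked hypothetical are placeholders.
resource "helm_release" "hpcc" {
  name       = "myhpcck8s" # hypothetical release name
  namespace  = "default"   # same namespace as Elastic4HPCCLogs
  repository = "https://hpcc-systems.github.io/helm-chart"
  chart      = "hpcc"

  # Each entry is a YAML document of chart values, merged in order like
  # repeated `helm -f` flags. logaccess.yaml (hypothetical) holds the
  # logaccess settings.
  values = [
    file("${path.module}/logaccess.yaml"),
  ]
}
```

Keeping the logaccess settings in their own file means you can swap them later without touching the rest of the release definition.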

After adding the module to the .tf file, assigning the logaccess configuration to the HPCC Systems platform Helm deployment, and saving the file, initialize the configuration so that Terraform downloads the terraform-azurerm-hpcc-logging module. The command to do that is: `terraform init`

Once initialization completes, deploy the module by running: `terraform apply`

After reviewing the plan of the actions Terraform will take, type yes and press Enter to accept it. If the deployment succeeds, you should be able to read logs from ECLWatch as shown in Figure 1.

Azure Log Analytics

Azure Log Analytics is a tool in the Azure portal for editing and running log queries against data collected by Azure Monitor Logs and interactively analyzing the results. You can use Log Analytics queries to retrieve records that match particular criteria, identify trends, analyze patterns, and gain various insights into your data. With Log Analytics for HPCC Systems, logs are ingested from the HPCC Systems platform and Kubernetes, stored, and then queried by different services such as ESP and Azure Insights. Log Analytics usage is billed on data ingestion and the retention period, not on the creation of the workspace. To learn more about Azure Log Analytics, please visit this link.

Please note: We recommend using a dedicated resource group for the Azure Log Analytics Workspace so that its cost is easier to track and manage.

For Azure Log Analytics, we will use the same module block we added to the .tf file in the Elastic4HPCCLogs tutorial, but replace the elastic4hpcclogs child object as follows:

```hcl
module "logging" {
  source = "git::https://github.com/hpcc-systems/terraform-azurerm-hpcc-logging.git?ref=v2.0.0"

  azure_log_analytics_workspace = {
    unique_name                = true
    internet_ingestion_enabled = true
    internet_query_enabled     = true
    location                   = "eastus"
    name                       = "myloganalyticsworkspace"
    resource_group_name        = "" # set to your (ideally dedicated) resource group
  }

  # Should be set as a secret environment variable or stored in a key vault
  azure_log_analytics_creds = {
    AAD_CLIENT_ID     = ""
    AAD_CLIENT_SECRET = ""
    AAD_TENANT_ID     = ""
    AAD_PRINCIPAL_ID  = ""
  }

  hpcc = {
    namespace = "default"
    version   = "8.12.0-rc1"
  }
}
```
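One way to follow the advice in that comment, sketched here under the assumption that each credential is a plain string, is to declare input variables and supply their values from the environment instead of hard-coding them. The variable names (`aad_client_id` and so on) are hypothetical; only the `azure_log_analytics_creds` argument of the existing module block changes, and the rest of that block stays as shown above.

```hcl
# Hypothetical input variables for the service principal credentials.
variable "aad_client_id" {
  type = string
}

variable "aad_client_secret" {
  type      = string
  sensitive = true # keeps the value out of plan and apply output
}

variable "aad_tenant_id" {
  type = string
}

variable "aad_principal_id" {
  type = string
}

module "logging" {
  # source, azure_log_analytics_workspace and hpcc unchanged from the block above.

  azure_log_analytics_creds = {
    AAD_CLIENT_ID     = var.aad_client_id
    AAD_CLIENT_SECRET = var.aad_client_secret
    AAD_TENANT_ID     = var.aad_tenant_id
    AAD_PRINCIPAL_ID  = var.aad_principal_id
  }
}
```

Terraform reads a variable such as `aad_client_secret` from an environment variable named `TF_VAR_aad_client_secret`, so the secret can be exported in your shell or CI secret store before `terraform apply`. If you prefer a key vault, an `azurerm_key_vault_secret` data source can supply the same values instead.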

Upon successful completion of the deployment, the module outputs several attributes. The ones that interest us most are workspace_resource_id and logaccess_body.

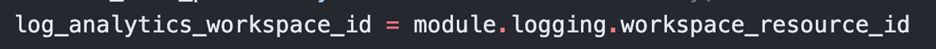

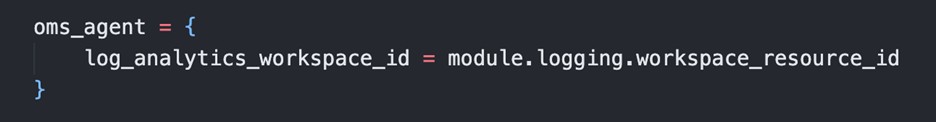
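If you call the module from your own root configuration, its outputs are only shown by `terraform output` once they are re-exported. A minimal sketch, assuming the module block is named `logging` as above:

```hcl
# Re-export the logging module outputs from the root module.
output "workspace_resource_id" {
  value = module.logging.workspace_resource_id
}

output "logaccess_body" {
  value = module.logging.logaccess_body
  # Assumption: treat the body as sensitive, since it may carry connection details.
  sensitive = true
}
```

Assuming workspace_resource_id is a string, `terraform output -raw workspace_resource_id` then prints it without quotes, ready to paste into another configuration.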

We need to assign the value of the workspace_resource_id attribute to the OMS Agent of the Azure Kubernetes Service to allow Kubernetes to send logs to the workspace.
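If you manage the cluster directly with the `azurerm_kubernetes_cluster` resource (azurerm provider 3.x assumed), that assignment can be sketched as an `oms_agent` block. The resource name `aks` is hypothetical, and the cluster's other required arguments are omitted for brevity.

```hcl
resource "azurerm_kubernetes_cluster" "aks" {
  # name, location, resource_group_name, dns_prefix, default_node_pool,
  # identity and other cluster settings omitted for brevity.

  # Send container logs to the workspace created by the logging module.
  oms_agent {
    log_analytics_workspace_id = module.logging.workspace_resource_id
  }
}
```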

Similarly, we need to assign the logaccess_body to the HPCC Systems platform Helm deployment to connect to the HPCC Systems platform log interface (Log Access). See Figure 2.
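A hedged sketch of that wiring passes the module output straight into the Helm release's `values` list. This assumes logaccess_body is already a YAML (or JSON) string of chart values; if it turns out to be an object, wrap it as `yamlencode(module.logging.logaccess_body)` instead. The release settings are omitted and the resource name `hpcc` is illustrative.

```hcl
resource "helm_release" "hpcc" {
  # name, chart, repository, namespace and other release settings omitted.

  # Assumption: logaccess_body is a string of Helm values (YAML or JSON).
  values = [
    module.logging.logaccess_body,
  ]
}
```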

Both assignments can be made before running terraform apply, but only once the AKS cluster is running.

If you are using our example repository, terraform-azurerm-hpcc, assign the workspace_resource_id in the admin.auto.tfvars file as shown in Figure 3, then run terraform apply a second time so that the change is applied to the Kubernetes cluster. If you use your own AKS module or AKS resource, the OMS Agent configuration can instead look like Figure 4.

Figure 3:

Figure 4:

After following the steps above, HPCC Systems platform component logs should be visible in the ECLWatch log tab.

Additional Resources

- HPCC Systems Platform Logging

- Azure Log Analytics Workspace

- HPCC Systems Platform Terraform Examples

- BLOG: Getting Started with the HPCC Systems Cloud Native Platform Using Terraform

Meet the author

Godji Fortil, Software Engineer III

Godji Fortil works as a Software Engineer III on the HPCC Systems platform team. He primarily works on testing and cloud infrastructure for the HPCC Systems platform. Godji has extensive experience coding in Terraform, having deployed his first application in OpenStack over two years ago. He has also been an internship mentor since joining the company; this year, his mentorship focused on cost management and optimization for the HPCC Systems cloud native platform. Next year, Godji hopes to attend Georgia Tech for a master's degree in computing systems.