Directly supporting academic research and co-authoring papers with academic partners around the globe has been an integral part of the HPCC Systems Academic Program over the years (view a complete list of academic publications). A recent example is the paper titled “Local Outlier Factor for Anomaly Detection in HPCC Systems”, co-authored by Arya Adesh, Shobha G, Jyoti Shetty, and Lili Xu, which presents a study of anomaly detection in distributed computing environments. The primary contribution of this paper is the implementation of an optimized version of the Local Outlier Factor (LOF) algorithm on the HPCC Systems platform, tailored to detect anomalies in large datasets.

Background

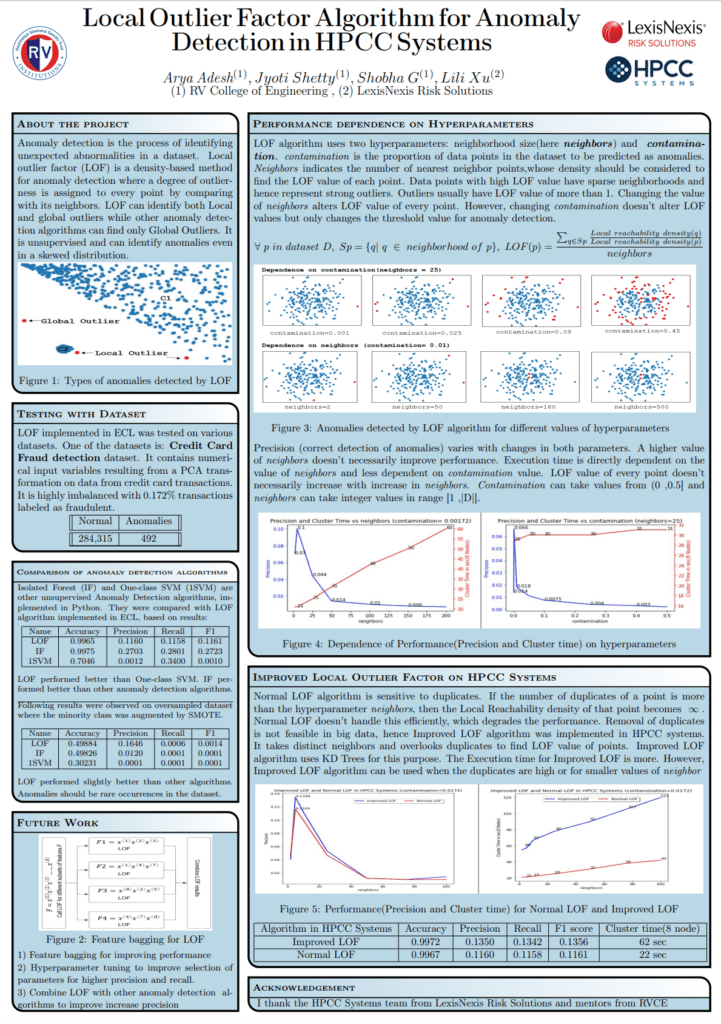

This research is a result of Arya Adesh’s initiative during his 2022 HPCC Systems internship, where he proposed and developed a novel solution to an important problem in big data analytics. At the time a Bachelor of Computer Science and Engineering student at RV College of Engineering (RVCE) in India, Arya became interested in anomaly detection, a critical field in data science, particularly for fraud detection and security applications. He was specifically interested in LOF, a density-based anomaly detection algorithm that assigns a degree of outlierness to each point in the dataset. LOF can find both global and local outliers and is particularly suitable for unevenly distributed datasets, because it makes no assumptions about the distribution and determines outliers from the density of each data point relative to its neighboring points.

Arya’s proposal to implement and enhance the LOF algorithm for anomaly detection on the HPCC Systems platform was accepted as a research project and was mentored by a truly global team: Lili Xu, Senior Software Engineer at LexisNexis Risk Solutions in the United States, alongside Jyoti Shetty, Assistant Professor of Computer Science and Engineering at RV College of Engineering, and Shobha G, Dean of the School of Computer Science and Engineering at RV University, both located in India. One of Arya’s significant contributions during this internship was the development of a scalable anomaly detection algorithm that runs in an optimized fashion on the HPCC Systems platform.

If you want to know more about Arya’s internship project, visit his project’s wiki page, which includes a short recorded presentation of his poster and his blog journal with a more in-depth look at his work.

The research paper

The complete results of Arya’s research have been published in the Journal of Parallel and Distributed Computing, and the first electronic version of the paper is already available for general access.

As its title implies, the paper explores the usage of the LOF algorithm for anomaly detection in HPCC Systems with potential contributions in the areas of scalability, duplicate handling, and benchmarking of the LOF algorithm.

What follows is a summary of the key points of Arya’s research covered in the paper. While this blog-style summary condenses the technical and implementation details into an accessible format, you are encouraged to read the full paper for the complete details.

Anomaly Detection

The paper focuses on detecting anomalies, which are unusual patterns in data that could indicate critical events like fraud or security threats. LOF is a well-known unsupervised anomaly detection algorithm that is particularly suited for this task. The algorithm assesses the local density of data points to identify outliers: points with a lower local density than their neighbors are considered outliers. The challenge, however, was to implement this algorithm in a distributed computing environment like HPCC Systems, which is specifically designed for big data analytics.
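To make the density comparison concrete, here is a minimal single-machine sketch of the standard LOF computation in Python. It is not the implementation from the paper, which runs on HPCC Systems. The function names `k_nearest` and `lof_scores` are hypothetical, the neighbor search is brute force, each point gets exactly `k` neighbors (ties at the k-distance are not expanded), and the data is assumed to contain no exact duplicates.

```python
import math


def k_nearest(points, i, k):
    """Return the k nearest neighbors of points[i] as (distance, index) pairs."""
    dists = sorted(
        (math.dist(points[i], q), j) for j, q in enumerate(points) if j != i
    )
    return dists[:k]


def lof_scores(points, k):
    """Brute-force LOF scores; assumes no exact duplicates in `points`."""
    n = len(points)
    neighbors = [k_nearest(points, i, k) for i in range(n)]
    # k-distance: distance from each point to its k-th nearest neighbor.
    k_dist = [nbrs[-1][0] for nbrs in neighbors]

    # Local reachability density: inverse of the mean reachability distance,
    # where reach-dist(i, j) = max(k-distance(j), dist(i, j)).
    lrd = []
    for i in range(n):
        reach = [max(k_dist[j], d) for d, j in neighbors[i]]
        lrd.append(len(reach) / sum(reach))

    # LOF: average neighbor density divided by the point's own density.
    return [
        sum(lrd[j] for _, j in neighbors[i]) / (len(neighbors[i]) * lrd[i])
        for i in range(n)
    ]


# Four points on a unit square plus one isolated point.
points = [(0, 0), (0, 1), (1, 0), (1, 1), (5, 5)]
scores = lof_scores(points, k=2)
```

In this example the four corner points score 1.0, meaning they are as dense as their neighbors, while the isolated point at (5, 5) scores roughly 6 and stands out as an outlier. If a point had `k` or more exact copies, the sum of reachability distances could be zero and the density would be undefined, which is why this sketch assumes duplicate-free input.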

Implementation in HPCC Systems

Arya successfully adapted the LOF algorithm to the HPCC Systems platform, leveraging its distributed computing architecture to handle large-scale datasets. As discussed before, the LOF algorithm works by calculating the local density of each data point in comparison to its neighbors, assigning an anomaly score to each point. Arya’s contribution optimized the algorithm to run efficiently on HPCC Systems, ensuring it scales well with the increasing size of datasets, making it suitable for real-time anomaly detection.

Improved LOF

One of the major limitations of the traditional LOF algorithm is its sensitivity to duplicate data points, which can distort density calculations and lead to inaccurate anomaly detection. Arya addressed this problem by proposing the Improved LOF Algorithm that focuses on two main areas:

- Handling Duplicates: The Improved LOF algorithm overcomes the challenge of duplicates by using a k-d tree-based method to identify unique neighbors for each data point. Instead of relying on raw data densities, which could be skewed by duplicate entries, the Improved LOF algorithm ensures that only unique neighbors are considered for density calculations. This greatly enhances the accuracy of anomaly detection, particularly in large datasets where duplicates are common.

- Segmented and Unsegmented k-d Tree Methods: To efficiently calculate the nearest neighbors, Arya proposed the segmented and unsegmented k-d tree approaches. The segmented method distributes the dataset across multiple nodes in the cluster, builds local k-d trees, and then combines the results to obtain global nearest neighbors. The unsegmented method, on the other hand, builds a k-d tree using the entire dataset and queries it for each data point across all nodes.
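The two ideas above, unique neighbors and per-segment search followed by a global merge, can be sketched together in a few lines of Python. This is an illustration under stated assumptions, not the paper’s method: a brute-force scan stands in for each segment’s k-d tree, plain lists stand in for cluster nodes, points that coincide with the query are treated as duplicates and skipped, and the names `local_unique_neighbors` and `global_unique_neighbors` are hypothetical.

```python
import heapq
import math


def local_unique_neighbors(segment, query, k):
    """k nearest distinct points to `query` within one segment.

    A brute-force scan stands in for the per-segment k-d tree query.
    """
    # A set collapses duplicate coordinates; copies of the query are skipped.
    distinct = {p for p in segment if p != query}
    return heapq.nsmallest(k, ((math.dist(p, query), p) for p in distinct))


def global_unique_neighbors(segments, query, k):
    """Merge per-segment candidates into the k nearest distinct points overall."""
    candidates = set()
    for segment in segments:
        # The same point found in two segments yields the same tuple,
        # so the set also removes duplicates across segments.
        candidates.update(local_unique_neighbors(segment, query, k))
    return heapq.nsmallest(k, candidates)


# Two "nodes", each holding part of a dataset that contains duplicates.
segments = [
    [(0, 0), (0, 0), (1, 0), (1, 0)],
    [(0, 0), (1, 0), (0, 2), (3, 0)],
]
nearest = global_unique_neighbors(segments, query=(0, 0), k=2)
```

Because every globally nearest distinct point is also among the nearest distinct points of the segment that holds it, merging the local top-k lists recovers the global top-k. Here `nearest` contains (1, 0) and (0, 2), whereas a search that kept duplicates would return copies of (0, 0) at distance zero.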

Comparative Analysis

The paper also compares the performance of the LOF algorithm with other anomaly detection algorithms like COF (Connectivity-based Outlier Factor), LoOP (Local Outlier Probability), and kNN (k-Nearest Neighbors) across various datasets. The results show that LOF offers better precision and scalability in detecting anomalies, especially in distributed computing environments.

From an execution platform perspective, Arya conducted experiments to compare the performance of the LOF implementation in HPCC Systems with different big data platforms, including Hadoop and Spark. LOF in HPCC Systems was found to be more efficient, particularly with larger datasets.

Contributions

The LOF algorithm, especially its improved version, demonstrates high efficacy in detecting anomalies in big data environments like HPCC Systems. The study highlights the algorithm’s potential for various real-world applications, including fraud detection, network security, and quality control. This work not only benefits the HPCC Systems community but also has broader implications for various industries relying on efficient, distributed anomaly detection systems.

Through his internship and subsequent research, Arya not only deepened his understanding of distributed systems but also delivered a highly efficient version of the LOF algorithm. His work demonstrated a novel approach to handling duplicates in large datasets and improved the performance of the algorithm on the HPCC Systems platform. Arya’s dedication and technical insights have led to a valuable contribution to both the academic community and the HPCC Systems platform.

About the authors

Arya Adesh is currently pursuing a Bachelor’s degree in Computer Science and Engineering at RV College of Engineering. He joined the HPCC Systems Intern Program in 2022, where he implemented anomaly detection algorithms for big data. Additionally, as a research intern at NIT Trichy, he worked on ontologies and developed algorithms for Web 3.0. He has undertaken several industry-relevant projects in image processing, deep learning, and web development.

Shobha G is Dean of the School of Computer Science and Engineering at RV University, Bengaluru, India. She has 27 years of teaching experience, and her specializations include Data Mining, Machine Learning, and Image Processing. She has published more than 150 papers in reputed journals and conferences. She has also executed sponsored projects worth INR 300 lakhs funded by various agencies nationally and internationally.

Jyoti Shetty is an Assistant Professor in the Computer Science and Engineering Department at RV College of Engineering in Bengaluru, India. She has 17 years of teaching and 2 years of industry experience. Her specializations include Data Mining, Machine Learning, and Artificial Intelligence. She has published 50+ research papers in reputed journals and conferences. She has also executed sponsored projects funded by various agencies nationally and internationally, and has delivered expert talks at various IT companies.

Lili Xu is a Software Engineer working at LexisNexis Risk Solutions. She completed her PhD at Clemson University in 2016. Lili also completed three consecutive internships with HPCC Systems before joining LexisNexis Risk Solutions as a full-time employee. She has published several research papers in reputed conferences and journals.