HPCC Systems on the Google Cloud Platform

HPCC Systems is going cloud native, and the cloud development team has been active with projects related to the new “HPCC Systems cloud native platform.” The operating environment for the new platform consists of Docker containers managed by Kubernetes. These containers are deployed by Helm charts on various cloud platforms, such as Azure (AKS), Amazon Web Services (AWS), and Google Cloud.

Jefferson “Jeff” Mao, a 12th-grade student at Lambert High School in Suwanee, Georgia, presented his work, “HPCC Systems on the Google Cloud Platform,” during HPCC Systems Tech Talk 35. The full recording of Jeff’s Tech Talk is available on YouTube.


During his summer internship, Jeff worked with Xiaoming Wang to set up an HPCC Systems cluster on the “cloud native platform.” He specifically designed the web application used to create a new HPCC Systems cluster on the Google Cloud Platform. Jeff also evaluated the new Google Anthos GKE platform and ran test programs to see how they perform in comparison to HPCC Systems’ bare metal version. Jeff ran regression tests and added new ones where necessary for areas that are cloud specific, such as scaling. He also tested HPCC Systems Helm charts for the “cloud native platform,” providing useful feedback that has contributed to improvements in performance.

More information on Jeff’s work can be found in Jeff’s blog journal.

In this blog, we discuss:

- Introduction to the Google Kubernetes Engine (GKE)

- HPCC Systems Native Cloud Development

- HPCC Systems Cluster Deployment and Basic Tests

- GKE Autoscaling

- Introduction to Anthos

- Introduction to Istio Service Mesh

- Logging on the Google Cloud Platform

- Monitoring GKE Clusters with Anthos

- Monitoring Non-GKE Clusters with Anthos

Let’s begin by introducing the Google Kubernetes Engine (GKE).

Introduction to the Google Kubernetes Engine (GKE)

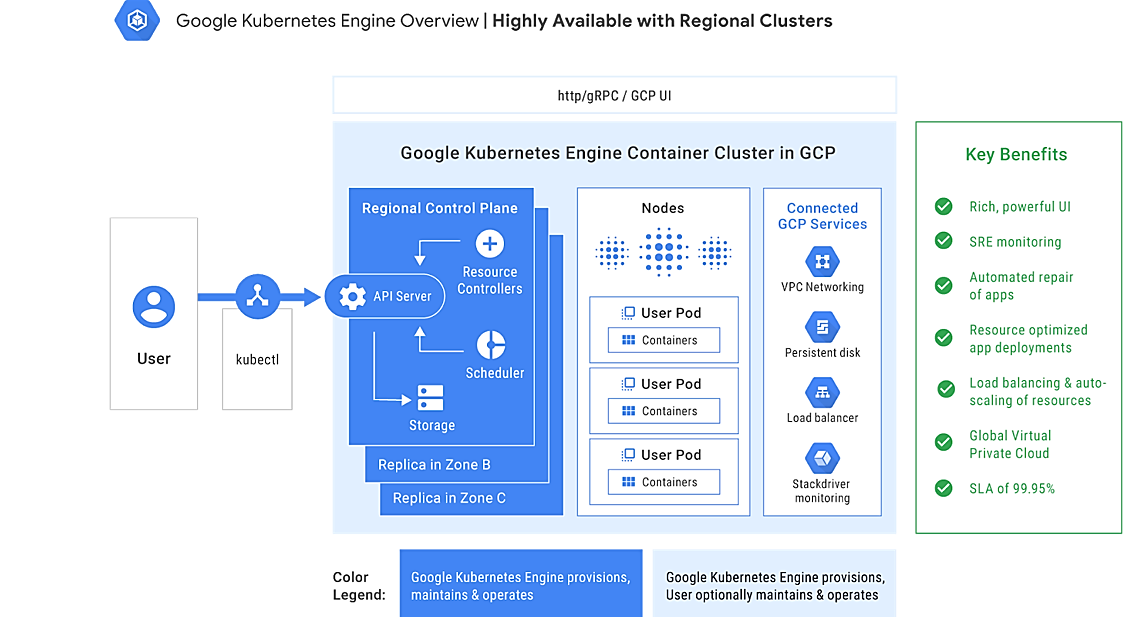

Google Kubernetes Engine (GKE) provides a managed environment for deploying, managing, and scaling containerized applications using the Google infrastructure. The GKE environment consists of multiple machines grouped together to form a cluster.

Google Kubernetes Engine has many features and benefits, including Kubernetes CLI support, load balancing, autoscaling, and cost efficiency. Downtime is minimal, and this engine has almost 50 times more nodes per cluster. Users also benefit from Google Cloud Platform features like Anthos, app engine monitoring, and logging.

GKE Features

Next, we will move on to HPCC Systems Native Cloud platform development, and deployment of the platform to the cloud via the Google Kubernetes Engine (GKE). We will also discuss testing and GKE autoscaling.

HPCC Systems Native Cloud Development

Overview of the HPCC Systems Native Cloud Platform

The initial version of the HPCC Systems cloud native platform was released in version 7.8.0. Since then, there have been several releases of the cloud platform with updates. The current release of the HPCC Systems cloud native platform is available for download. More information about the cloud native platform can be found on the HPCC Systems Cloud Native Wiki Page.

Deployment to GKE

With the development of the HPCC Systems cloud native platform, deploying HPCC Systems to the cloud is now a much easier process. Previously, HPCC Systems was deployed to the cloud on virtual machines.


Scripts are available on GitHub to streamline the process. To access these scripts, go to: https://github.com/HypePhilosophy/hpcc-gcp. Instructions are available in the README.

To deploy the HPCC Systems cloud native platform, clone the hpcc-init and hpcc-remove shell scripts into your project root path, then run ./hpcc-init to deploy the project onto GKE, or ./hpcc-remove to delete the existing project.

HPCC Systems Cluster Deployment and Basic Tests

The following tests were performed for deployment of the HPCC Systems platform through Google Kubernetes:

ECL Playground Samples

ECL playground samples were tested through the command line, with a 100% success rate. Expected results were received.

ECL Watch Data Tutorial

Tests from the ECL Watch data tutorial were run. The tutorial was provided by the Boca Raton documentation team. Most of the tasks from the tutorial could be completed, with the following exceptions:

- Spraying landing zones will not work. Users will need to copy the data to the shared persistent volume.

- Publishing queries through the ECL browser is not supported.

For testing documentation go to the following link:

https://hpccsystems.com/training/documentation/learning-ecl

The testing documentation can be found under the heading, “HPCC Systems Data Tutorial: with an Introduction to Thor and ROXIE.”

Regression Tests

Regression tests were run on Google Kubernetes with mostly expected results. There were a few failures and errors, and the development team is currently working through the issues.

Now we will look at an important feature of GKE, the Google Kubernetes Engine Autoscaler.

GKE Cluster Autoscaler

What does the GKE Cluster Autoscaler do?

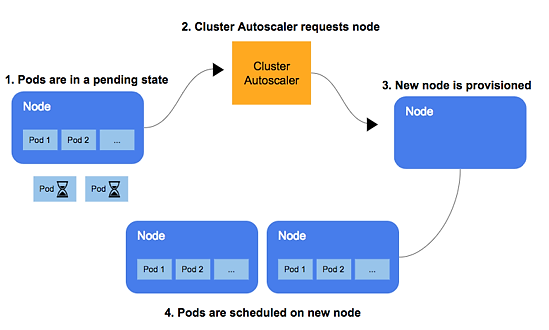

The GKE Cluster Autoscaler automatically resizes the number of nodes in a given node pool. Google Kubernetes decides when to resize based on the demands of the workload, within constraints set by the user.

Using the GKE Cluster Autoscaler

To use the GKE cluster autoscaler, the user must enable “cluster autoscaling” and set the minimum and maximum number of nodes per node pool. The remainder of the configuration will be set automatically. The cluster autoscaler is very useful because it automatically removes, adds, or over-provisions node pools, mitigating errors and cost overruns.
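The role of the minimum and maximum settings can be illustrated with a small Python sketch. This is a simplified model, not GKE's actual algorithm: the function name and the pool limits are hypothetical, and the only behavior shown is that a requested node count is kept within the user's bounds.

```python
def clamp_node_count(requested: int, min_nodes: int, max_nodes: int) -> int:
    """Return the node count a pool may reach for a requested size.

    Simplified illustration: the autoscaler never goes below min_nodes
    or above max_nodes, whatever the workload asks for.
    """
    if min_nodes > max_nodes:
        raise ValueError("min_nodes must not exceed max_nodes")
    return max(min_nodes, min(requested, max_nodes))


if __name__ == "__main__":
    # Hypothetical pool limits: at least 1 node, at most 5.
    for requested in (0, 3, 9):
        print(requested, "->", clamp_node_count(requested, 1, 5))
```

A request below the minimum is raised to the minimum, and a request above the maximum is capped, which is how the maximum setting limits cost.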


Horizontal/Vertical Autoscaler

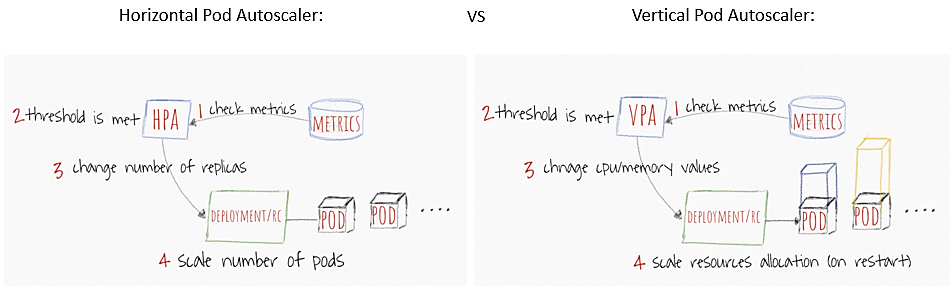

Google’s horizontal autoscaler works in different stages.

The horizontal autoscaler:

1. Checks metrics and usage.

2. Ensures that the threshold set by Google has been met.

3. Changes the number of replicas (identical pods).

4. Deploys these changes, based on the set threshold or on the automatic recommendations that Google makes, and scales the number of pods.
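The stages above can be sketched in Python using the scaling rule documented for the Kubernetes Horizontal Pod Autoscaler, where the desired replica count is the current count scaled by the ratio of the observed metric to the target metric, rounded up. This is a minimal sketch: the function name and the example numbers are assumptions, and the real autoscaler also applies a tolerance band and stabilization windows that are omitted here.

```python
import math


def desired_replicas(current_replicas: int,
                     current_metric: float,
                     target_metric: float,
                     min_replicas: int,
                     max_replicas: int) -> int:
    """Compute a replica count from observed and target metric values."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    # Scale the current replica count by how far the metric is from target.
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Keep the result within the configured bounds.
    return max(min_replicas, min(raw, max_replicas))


if __name__ == "__main__":
    # Hypothetical case: 4 pods at 90% CPU with a 60% target.
    print(desired_replicas(4, 90, 60, min_replicas=1, max_replicas=10))
```

With these example values the load per pod is 1.5 times the target, so the sketch asks for 1.5 times as many pods, subject to the upper bound.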

Google’s vertical autoscaler works much like the horizontal autoscaler, except that instead of increasing the number of pods, it increases the amount of CPU and memory per pod. The vertical autoscaler can be configured to give recommendations or to make automatic changes to CPU and memory requests.

Horizontal and vertical autoscaling mitigate resource issues and other problems when HPCC Systems Roxie and ECL Watch experience high traffic.

The image below is a visual representation of the different stages of horizontal and vertical autoscaling.

Another feature in the Google Cloud portfolio is Google Cloud Anthos. This software provides a single, consistent way of managing Kubernetes workloads across on-premises and public cloud environments.

Introduction to Anthos

About Anthos

Anthos is a platform that allows the user to develop and run applications in Google Cloud, and across on-premises and other public cloud environments. This platform simplifies the development, deployment, and operation of applications across hybrid Kubernetes deployments and multiple public cloud environments by linking incompatible cloud architectures. Anthos also allows for policy enforcement, as well as service, cluster, and infrastructure management. Anthos comes with a variety of customizable components to help users with application needs.

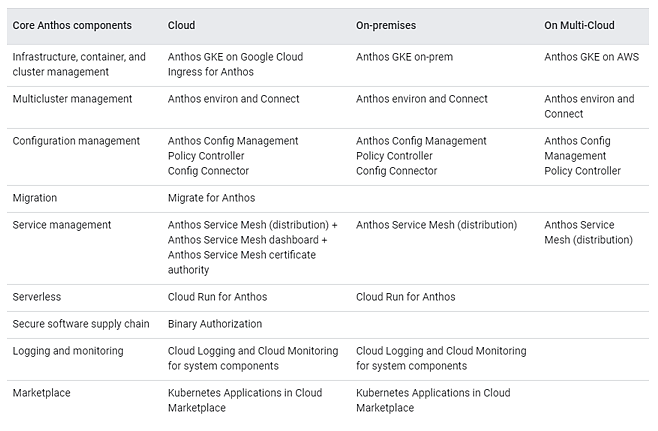

Anthos Components

The chart below shows a list of core Anthos components.

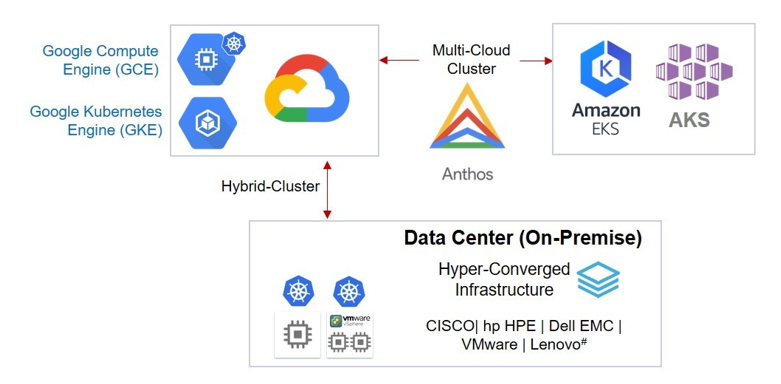

Anthos Multi-Cloud Cluster Management

The diagram below is a visual depiction of Anthos multi-cloud cluster management. Three types of clusters are seamlessly controlled by Anthos.

HPCC Systems Multi-Cloud Examples

HPCC Systems can run on multiple clouds, with one cluster set up for production, another for testing, and a third for development. In the Azure example, the majority of the HPCC Systems pods run in one cluster, the Thor pods in a second cluster, and the ECL Watch services in a third.

Please note that clusters will generally stay in the same cloud environment. Clusters can be separated into different cloud environments, but performance may be impacted.

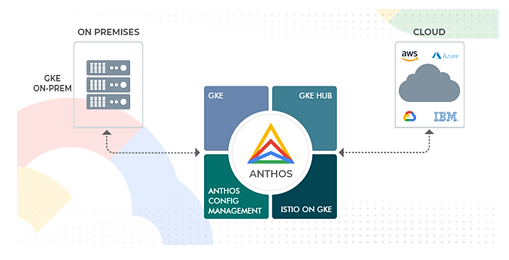

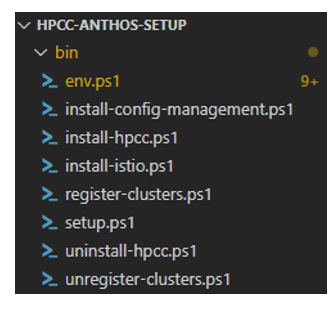

Anthos Setup

Scripts were created to install Anthos components and HPCC Systems, and to register each cluster. These scripts can be found on GitHub: https://github.com/HypePhilosophy/HPCC-Anthos-Setup. Please follow the README in the Anthos setup repository for instructions.

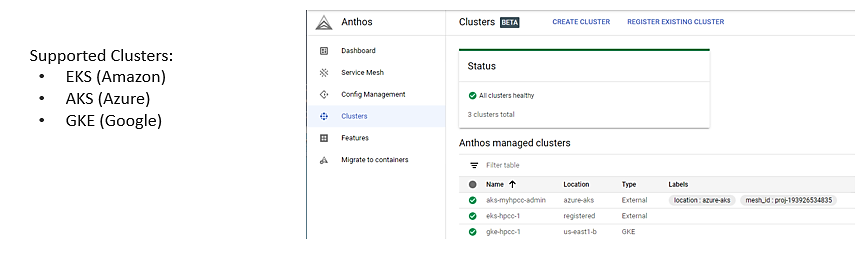

Registered Anthos Clusters

Kubernetes clusters for HPCC Systems can be registered through Google Cloud SDK for AKS (Azure Kubernetes Service), EKS (Amazon Elastic Kubernetes Service), and GKE (Google Kubernetes Engine). It is also possible to register on-premises clusters.

Registered clusters can be managed through Anthos, which has a number of features, such as Ingress, Config Management, Service Mesh, and Cloud Run.

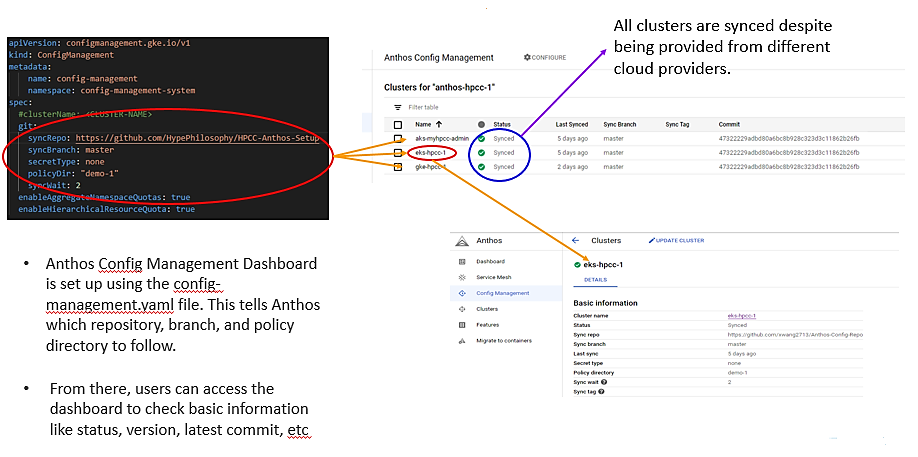

Anthos Config Management

Anthos config management is a service that allows the user to create a common configuration across an infrastructure (including custom policies) and apply it across cloud and on-premises platforms.

Anthos config management evaluates changes and rolls them out to all Kubernetes clusters so that the desired state is always reflected.

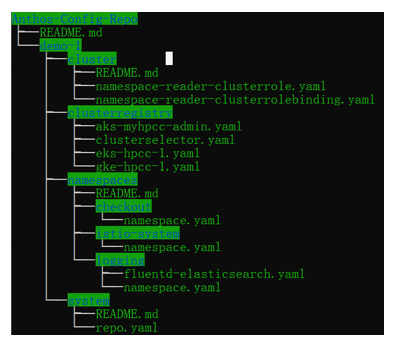

There are two types of repositories: unstructured and structured. Structured repositories are easier to work with.

The default repository structure for structured repositories consists of the following:

Default Repository Structure:

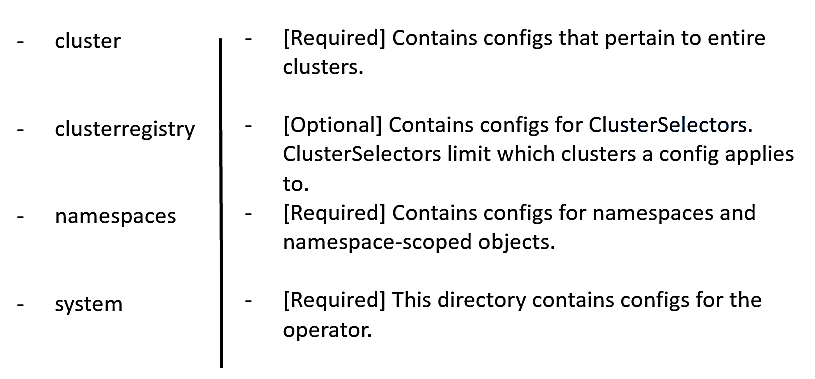

Anthos Config Management Dashboard

The Anthos Config Management dashboard enables the user to automate policy and security at scale in hybrid cloud and on-premises container environments, through the cloud console. Anthos config management is a simple way to implement configurations through code. It is set up to use the config-management.yaml file. This tells Anthos which repository, branch, and policy directory to follow.
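To make the three settings concrete, here is a minimal Python sketch that builds a manifest of the kind config-management.yaml holds and prints it as JSON, which kubectl also accepts. The field names follow the ConfigManagement resource as commonly documented for Anthos Config Management and should be checked against the installed operator version. The branch name, policy directory, and cluster name are hypothetical; only the repository URL comes from the links in this post.

```python
import json


def config_management_manifest(repo: str, branch: str,
                               policy_dir: str, cluster_name: str) -> dict:
    """Build a ConfigManagement manifest as a plain dictionary."""
    return {
        "apiVersion": "configmanagement.gke.io/v1",
        "kind": "ConfigManagement",
        "metadata": {"name": "config-management"},
        "spec": {
            "clusterName": cluster_name,
            "git": {
                "syncRepo": repo,         # which repository to follow
                "syncBranch": branch,     # which branch to follow
                "secretType": "none",     # assumes a public repository
                "policyDir": policy_dir,  # which directory holds the configs
            },
        },
    }


if __name__ == "__main__":
    manifest = config_management_manifest(
        repo="https://github.com/HypePhilosophy/hpcc-anthos",
        branch="main",          # hypothetical branch name
        policy_dir="policies",  # hypothetical policy directory
        cluster_name="hpcc-gke",  # hypothetical cluster name
    )
    print(json.dumps(manifest, indent=2))
```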

From there, users can access the dashboard to check basic information like status, version, latest commit, etc. All clusters are synced and controlled through Anthos, as seen in the image below.

How to Utilize Anthos Config Management

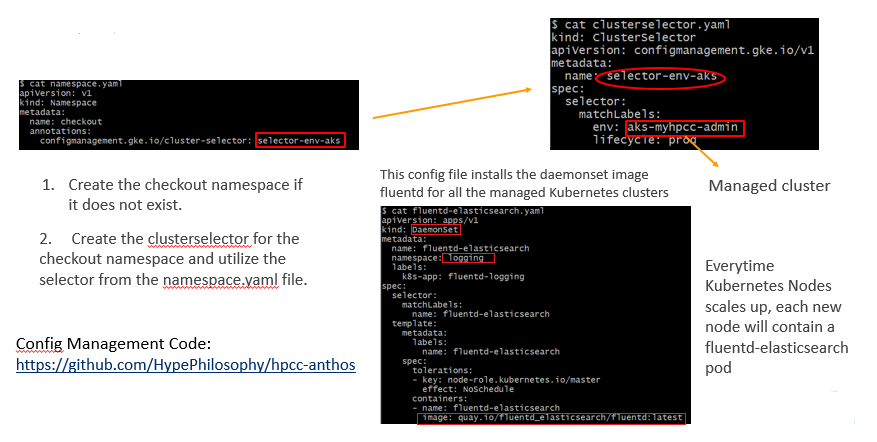

This example shows how to utilize Anthos Config Management. The image below outlines the steps to create a namespace and a fluentd-elasticsearch pod for all clusters.

Managing multi-cloud deployments is challenging and can become very complex. Istio is a platform that addresses these challenges and helps reduce the complexity of the deployments.

Istio Service Mesh

What is a Service Mesh?

A service mesh is an infrastructure layer that enables managed, observable, and secure communications across all services. It is a microservice structure that enables the user to manage requests and internet traffic.

What is Istio?

Istio is a powerful and highly configurable open source service mesh platform that features many tools and services.

Installation

To install Istio, run the ./install-istio.ps1 script from the HPCC-Anthos-Setup GitHub repository.

https://github.com/HypePhilosophy/HPCC-Anthos-Setup

Istio and HPCC Systems Cluster Install

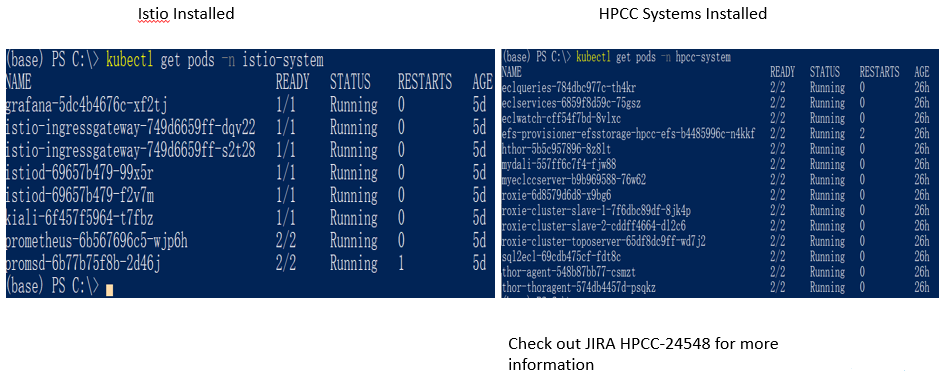

Once Istio has been installed, verify it with the command kubectl get pods -n istio-system, as shown on the left side of the image above. This command asks Kubernetes to list all pods in the istio-system namespace. After installing the HPCC Systems cluster, confirm that Istio is working properly by listing the pods, as seen on the right side of the image. If 2/2 shows in the READY column, the Istio setup was successful. There are two containers per pod because of the Istio sidecar, a utility container that regulates and controls network communications between microservices. In this example, one container is for the service, and the other is for monitoring the service.

There are some issues with the Istio sidecar. Because the sidecar is always on, a job continues to run after its main process has finished. A case has been opened in JIRA in the hope of finding a workaround for this bug. For more information, see JIRA HPCC-24548.
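The READY check can also be automated. The Python sketch below parses text in the column layout that kubectl get pods prints and reports any pod whose ready count does not match its container count. The sample output and pod names are hypothetical, and the helper is an illustration rather than part of the setup scripts.

```python
from typing import List

# Hypothetical output in the layout printed by "kubectl get pods".
SAMPLE_OUTPUT = """\
NAME                        READY   STATUS    RESTARTS   AGE
eclwatch-7d9c5b6f4-abcde    2/2     Running   0          5m
roxie-5f7b8c9d6-fghij       1/2     Running   0          5m
"""


def pods_not_ready(kubectl_output: str) -> List[str]:
    """Return names of pods whose READY column is not n/n."""
    not_ready = []
    lines = kubectl_output.strip().splitlines()
    for line in lines[1:]:  # skip the header row
        fields = line.split()
        if len(fields) < 2:
            continue
        name, ready = fields[0], fields[1]
        ready_count, _, total = ready.partition("/")
        if ready_count != total:
            not_ready.append(name)
    return not_ready


if __name__ == "__main__":
    print(pods_not_ready(SAMPLE_OUTPUT))
```

An empty result means every pod, including its sidecar container, reported ready.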

Ingress Gateway Configuration

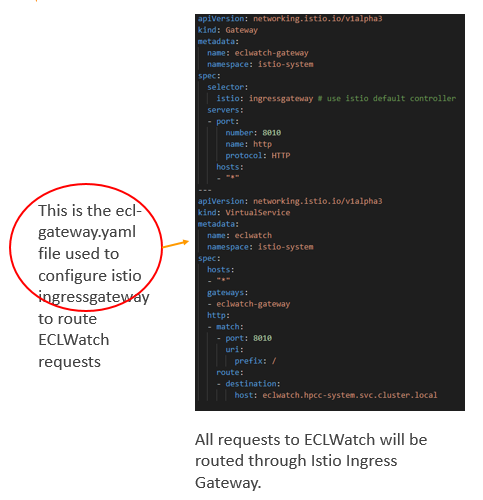

Istio/Ingress Gateway is a gateway network management system that defines rules for routing external HTTP/TCP traffic to services in the Kubernetes cluster. It provides more tools and functionality for routing than the basic load balancer. An example use of the Istio/Ingress Gateway is routing 20% of all requests to a new version while keeping 80% of requests on a stable version.
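The 80/20 split can be pictured as weighted random selection. The Python sketch below is only a simulation of that idea, not Istio's implementation: the version names "stable" and "canary" and the function name are assumptions made for illustration.

```python
import random
from collections import Counter


def pick_version(weights: dict, rng: random.Random) -> str:
    """Choose a destination version with probability proportional to its weight."""
    versions = list(weights)
    return rng.choices(versions, weights=[weights[v] for v in versions], k=1)[0]


if __name__ == "__main__":
    rng = random.Random(42)  # seeded so repeated runs behave the same
    weights = {"stable": 80, "canary": 20}
    counts = Counter(pick_version(weights, rng) for _ in range(10_000))
    for version, count in counts.items():
        print(f"{version}: {count / 10_000:.1%}")
```

Over many requests, the share sent to each version approaches its configured weight, which is what makes a gradual rollout of a new version possible.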

The benefits of Ingress Gateway are seen in its ability to control every aspect of internet traffic. The user is able to control security authorization and networking to different versions. It also has very competitive response times, making it one of the best gateway management systems on the market.

The image below shows the setup for the ingress gateway.yaml. This is configured through config management.

Now let’s discuss logging on the Google Cloud platform.

Logging on the Google Cloud Platform

There is a wide variety of native Google Kubernetes logging tools available to users. Anthos adds to this by allowing more detailed third-party metrics and graphs to be used.

If third-party solutions are not enough, users can opt to create their own graphs and charts through Google’s intuitive console UI.

The GKE Logging and Monitoring Dashboard shown below provides detailed information on specific services inside GKE. Each service can be expanded to show logs, graphs, and errors. This only works on GKE clusters.

Monitoring GKE Clusters with Anthos

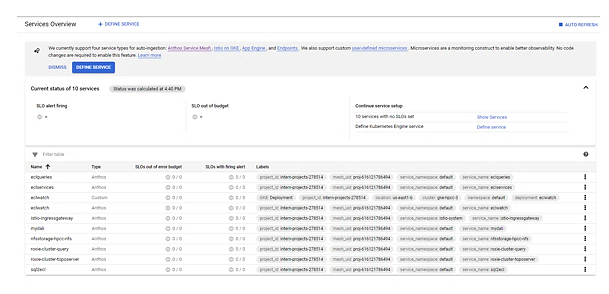

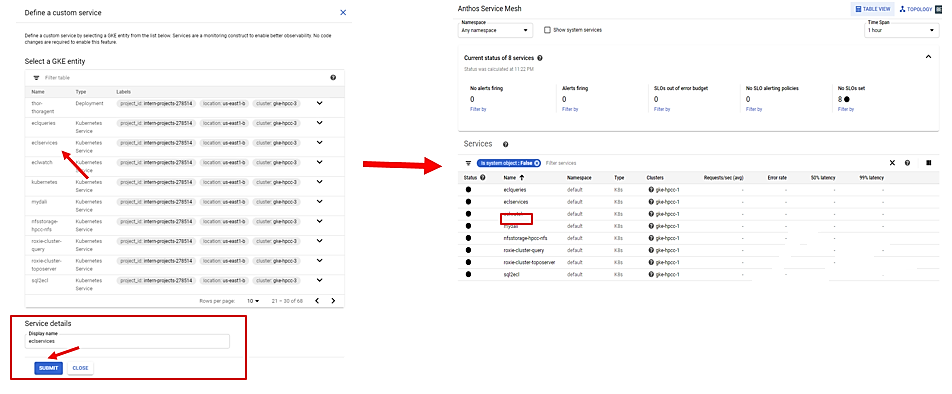

Google provides a vast array of options for monitoring GKE clusters. One of the more popular ways is through Anthos Service Mesh. In Anthos Service Mesh, the user is not limited to the default listed services and can choose which services to monitor.

To monitor a custom service:

1. From the Google Cloud console, select “Monitoring/Service.”

2. Select “Define Service.”

3. Select any entry from the list.

4. Submit the service.

5. The service will now appear in the Anthos Service Mesh Dashboard.

6. From the Anthos Service Mesh Dashboard, the user is able to set service-level objectives.

The ability to set metrics on any service greatly benefits development of HPCC-related software.

Now let’s take a look at how to monitor non-GKE clusters with Anthos.

Monitoring Non-GKE Clusters with Anthos

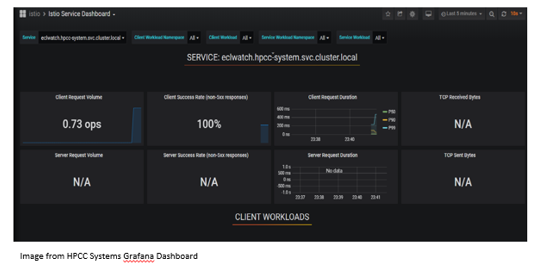

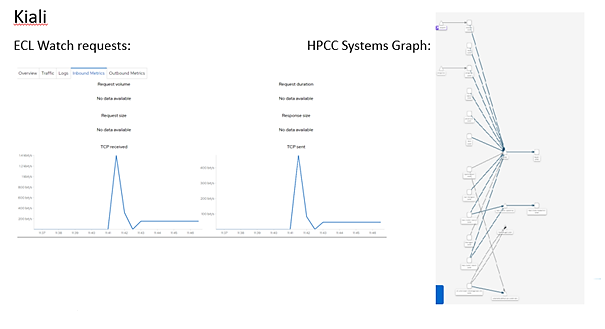

Third-party services, such as Grafana and Kiali, can be used to monitor non-GKE clusters. Users must define and apply configurations for each service. For example, to add Grafana, the user must create a grafana_gateway.yaml file.

Virtual services must be applied for each third-party solution. Solutions such as Grafana and Kiali utilize Prometheus metrics to provide seamless Google Anthos API integration for multi-cloud clusters.

Prometheus is a monitoring tool often used with Kubernetes. It can be toggled on and off and will show detailed information about clusters. It provides metrics to other tools.

Note: Prometheus, Kiali, and Grafana were not installed separately. These features were installed with Istio.

The image below shows what Kiali looks like when monitoring non-GKE HPCC clusters. The left image shows monitoring and logging tabs used to aid developers. The right image displays HPCC platform services and how they interrelate. When debugging, it is helpful to know where an error occurs and originates. This view also simplifies an otherwise complicated setup.

The ability to monitor and log on the Google cloud platform is one of its greatest strengths.

Conclusions

- Anthos reflects the trend toward multi-cloud Kubernetes management platforms.

- Useful components include:

– Config Management

– Istio Envoy, Monitoring and Logging, etc.

- Anthos is still in development; for example, it currently lacks support for GKE on Azure.

- Since Kubernetes cluster management is important, HPCC Systems may evaluate and compare other technologies, such as Azure Stack/Arc, AWS Outposts, and Rancher, to see which one fits HPCC Systems best.

- The Google Cloud Platform is versatile and continually expanding.

Useful Links

HPCC Systems Setup Scripts:

https://github.com/HypePhilosophy/hpcc-gcp

Anthos Component Setup Scripts:

https://github.com/HypePhilosophy/HPCC-Anthos-Setup

Anthos Config Management Files:

https://github.com/HypePhilosophy/hpcc-anthos

HPCC Systems blog:

https://hpccsystems.com/blog

Who is Jefferson “Jeff” Mao

Jefferson is a 12th-grade high school student who has his eyes set on studying business at the University of Pennsylvania in the future. He is already something of an entrepreneur, having founded Philosophy Robotics LLC, a software company that produces software for resellers, such as automated checkout services, reselling tools, and web scraping applications. He heard about the HPCC Systems intern program when taking part in CodeDay Atlanta, where he was a Best in Show prize winner.

Acknowledgements

A special thanks goes to Jefferson Mao for his valuable contributions to HPCC Systems cloud native platform development, and to Xiaoming Wang for his guidance and leadership.